No BDCN model in the model zoo: operator problem when migrating BDCN to MindSpore

Framework: mindspore-gpu 2.0, CUDA 10.1, Python 3.7

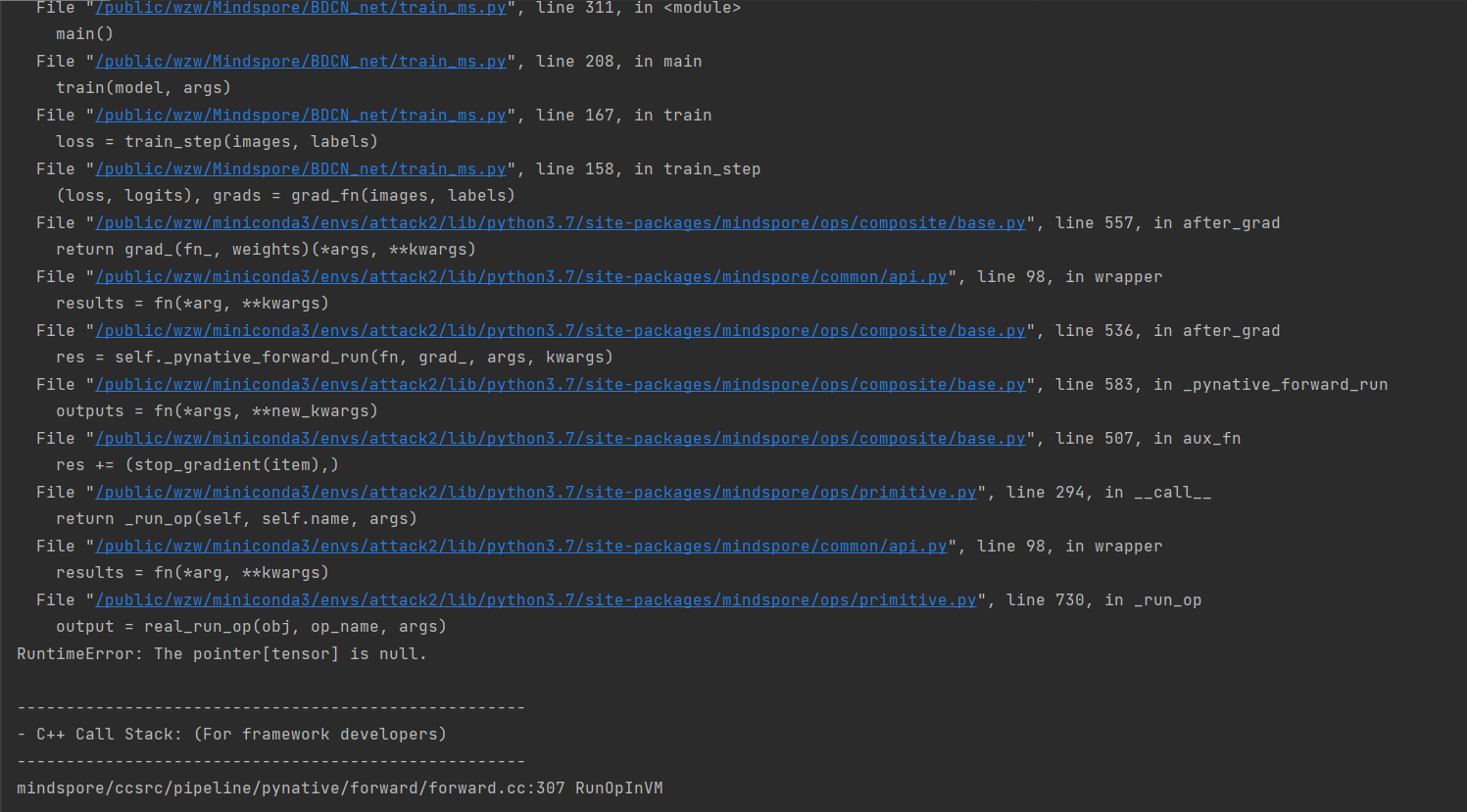

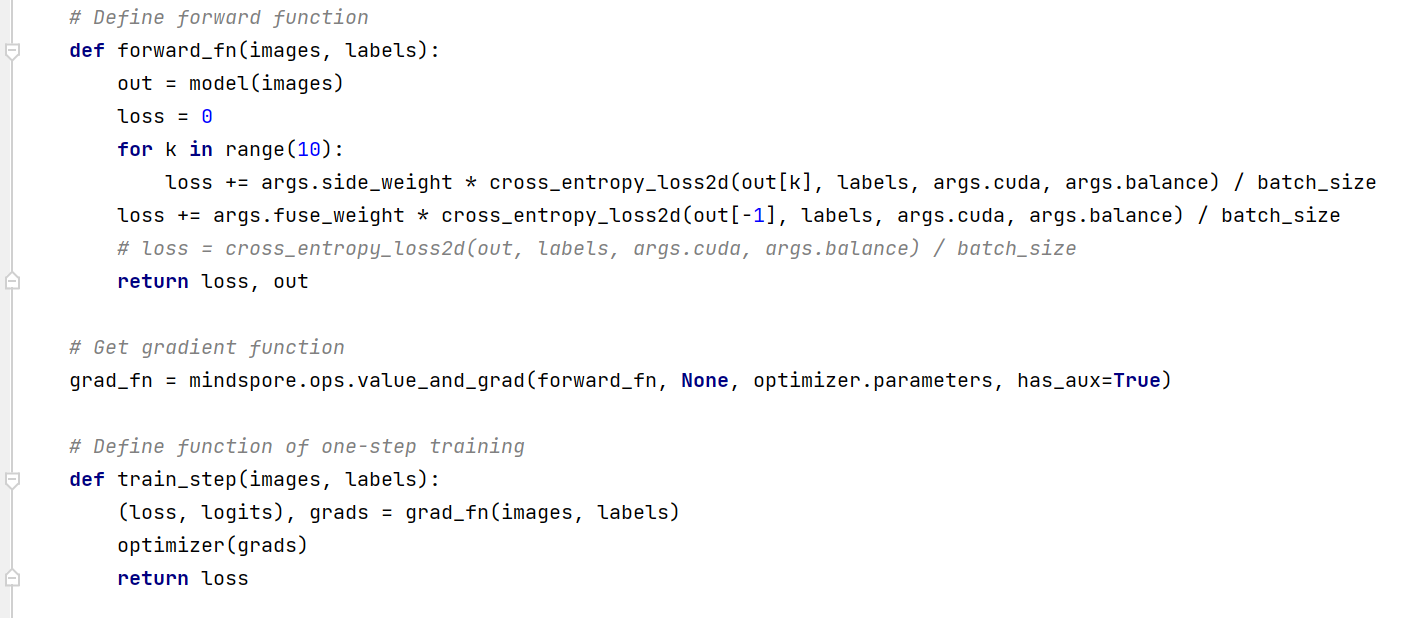

Problem: a pointer-related error is raised when the loss is computed (see the screenshots above).
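For reference, this is the objective that `cross_entropy_loss2d` and `forward_fn` in the training script compute, as I read the code. It assumes `out` holds 10 side outputs followed by the fused output, which is what the indexing `out[k]` for k < 10 and `out[-1]` suggests. Here λ is `balance`, α is `side_weight`, β is `fuse_weight`, and B = `iter_size` × `batch_size`. The counts |Y⁺| (pixels labelled 1) and |Y⁻| (pixels labelled 0) are taken per image, while N is the total number of elements in the batch, because `nn.BCELoss(..., reduction='mean')` averages over every element.

$$
w_j = \begin{cases}
\dfrac{|Y^-|}{|Y^+| + |Y^-|} & y_j = 1 \\[6pt]
\lambda \, \dfrac{|Y^+|}{|Y^+| + |Y^-|} & y_j = 0 \\[6pt]
0 & \text{otherwise}
\end{cases}
$$

$$
\mathcal{L}(x, y) = -\frac{1}{N} \sum_{j=1}^{N} w_j \left[ y_j \log \sigma(x_j) + (1 - y_j) \log\bigl(1 - \sigma(x_j)\bigr) \right]
$$

$$
\mathcal{L}_{\text{total}} = \frac{1}{B} \left( \alpha \sum_{k=0}^{9} \mathcal{L}\bigl(x^{(k)}, y\bigr) + \beta \, \mathcal{L}\bigl(x^{(\text{fuse})}, y\bigr) \right)
$$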

The code is below:

```python
import argparse
import os
import random
import re
import time

import cv2
import numpy as np

import mindspore
import mindspore.context as context
import mindspore.dataset as ds
from mindspore import nn, ops, Parameter, Tensor

import BDCN as bdcn
import cfg
import log
from dataset import GetDatasetGenerator
# from Transform import SegDataSet

device_target = 'GPU'

# Before MindSpore 2.0 the default was static graph mode, so PyNative is set explicitly
if device_target == 'GPU':
    os.environ['CUDA_VISIBLE_DEVICES'] = '7'
    context.set_context(mode=context.PYNATIVE_MODE, device_target="GPU")
else:
    context.set_context(mode=context.PYNATIVE_MODE, device_target="CPU")


def adjust_learning_rate(optimizer, steps, step_size, gamma=0.1, logger=None):
    """Multiply every learning rate held by the optimizer by `gamma`.

    MindSpore optimizers have no PyTorch-style `param_groups`, so the learning-rate
    Parameter of each parameter is fetched with `get_lr_parameter` instead
    (assumes fixed float learning rates, not a dynamic schedule).
    """
    seen = set()
    for param in optimizer.parameters:
        lr = optimizer.get_lr_parameter(param)
        if id(lr) in seen:  # several parameters may share one learning-rate Parameter
            continue
        seen.add(id(lr))
        ops.assign(lr, lr * gamma)
        if logger:
            logger.info('%s: %s' % (param.name, lr.asnumpy()))


def cross_entropy_loss2d(inputs, targets, cuda=False, balance=1.1):
    """
    :param inputs: 4-D tensor of logits, shape n x 1 x h x w
    :param targets: 4-D tensor of binary edge labels, shape n x 1 x h x w
    :param balance: extra weight applied to background (label 0) pixels
    :return: class-balanced binary cross-entropy, averaged over all pixels
    """
    n, c, h, w = inputs.shape
    # nn.BCELoss requires inputs, targets and weight to share one float dtype
    targets = targets.astype(mindspore.float32)
    targets_np = targets.asnumpy()
    weights = np.zeros((n, c, h, w), dtype=np.float32)
    for i in range(n):
        t = targets_np[i]
        pos = (t == 1).sum()
        neg = (t == 0).sum()
        valid = neg + pos
        weights[i, t == 1] = neg * 1. / valid
        weights[i, t == 0] = pos * balance / valid
    weights = mindspore.Tensor(weights, dtype=mindspore.float32)
    inputs = ops.sigmoid(inputs)
    loss = nn.BCELoss(weights, reduction='mean')(inputs, targets)
    return loss


def train(model, args):
    # data_root = r'/public/xdw/lcb/mindspore/BDCN/Data_512/mindrecord'
    # trainloader = SegDataSet(mindrecord_root=data_root, batch_size=args.batch_size)
    # trainloader = trainloader.get_dataset(repeat=1)
    train_img = GetDatasetGenerator()
    trainloader = ds.GeneratorDataset(source=train_img, column_names=["images", "labels"])
    trainloader = trainloader.shuffle(4)
    trainloader = trainloader.batch(args.batch_size)
    start_step = 1
    mean_loss = []
    pos = 0
    iter_per_epoch = trainloader.get_dataset_size()
    logger = args.logger
    logger.info('*' * 40)
    logger.info('train batches per epoch: %d' % iter_per_epoch)
    logger.info('*' * 40)
    start_time = time.time()

    # Per-parameter learning rate and weight decay, keyed on the parameter name
    params_dict = dict(model.parameters_and_names())
    base_lr = args.base_lr
    weight_decay = args.weight_decay
    params = []
    for key, n in params_dict.items():
        v = [n]
        if re.match(r'conv[1-5]_[1-3]_down', key):
            if 'weight' in key:
                params += [{'params': v, 'lr': base_lr * 0.1, 'weight_decay': weight_decay * 1}]
            elif 'bias' in key:
                params += [{'params': v, 'lr': base_lr * 0.2, 'weight_decay': weight_decay * 0}]
        elif re.match(r'.*conv[1-4]_[1-3]', key):
            if 'weight' in key:
                params += [{'params': v, 'lr': base_lr * 1, 'weight_decay': weight_decay * 1}]
            elif 'bias' in key:
                params += [{'params': v, 'lr': base_lr * 2, 'weight_decay': weight_decay * 0}]
        elif re.match(r'.*conv5_[1-3]', key):
            if 'weight' in key:
                params += [{'params': v, 'lr': base_lr * 100, 'weight_decay': weight_decay * 1}]
            elif 'bias' in key:
                params += [{'params': v, 'lr': base_lr * 200, 'weight_decay': weight_decay * 0}]
        elif re.match(r'score_dsn[1-5]', key):
            if 'weight' in key:
                params += [{'params': v, 'lr': base_lr * 0.01, 'weight_decay': weight_decay * 1}]
            elif 'bias' in key:
                params += [{'params': v, 'lr': base_lr * 0.02, 'weight_decay': weight_decay * 0}]
        elif re.match(r'upsample_[248](_5)?', key):
            if 'weight' in key:
                params += [{'params': v, 'lr': base_lr * 0, 'weight_decay': weight_decay * 0}]
            elif 'bias' in key:
                params += [{'params': v, 'lr': base_lr * 0, 'weight_decay': weight_decay * 0}]
        elif re.match(r'.*msblock[1-5]_[1-3]\.conv', key):
            if 'weight' in key:
                params += [{'params': v, 'lr': base_lr * 1, 'weight_decay': weight_decay * 1}]
            elif 'bias' in key:
                params += [{'params': v, 'lr': base_lr * 2, 'weight_decay': weight_decay * 0}]
        else:
            if 'weight' in key:
                params += [{'params': v, 'lr': base_lr * 0.001, 'weight_decay': weight_decay * 1}]
            elif 'bias' in key:
                params += [{'params': v, 'lr': base_lr * 0.002, 'weight_decay': weight_decay * 0}]

    optimizer = nn.SGD(params, momentum=args.momentum,
                       learning_rate=args.base_lr, weight_decay=args.weight_decay)

    # for param_group in optimizer.param_groups:
    #     if logger:
    #         logger.info('%s: %s' % (param_group['name'], param_group['lr']))
    # if args.cuda:
    #     model.cuda()
    # if args.resume:
    #     logger.info('resume from %s' % args.resume)
    #     state = torch.load(args.resume)
    #     start_step = state['step']
    #     optimizer.load_state_dict(state['solver'])
    #     model.load_state_dict(state['param'])

    batch_size = args.iter_size * args.batch_size

    # Forward function: 10 side outputs out[0..9] plus the fused output out[-1]
    def forward_fn(images, labels):
        out = model(images)
        loss = 0
        for k in range(10):
            loss += args.side_weight * cross_entropy_loss2d(out[k], labels, args.cuda, args.balance) / batch_size
        loss += args.fuse_weight * cross_entropy_loss2d(out[-1], labels, args.cuda, args.balance) / batch_size
        # loss = cross_entropy_loss2d(out, labels, args.cuda, args.balance) / batch_size
        return loss, out

    # Gradient function with respect to the optimizer's parameters
    grad_fn = ops.value_and_grad(forward_fn, None, optimizer.parameters, has_aux=True)

    # One training step: forward, backward, parameter update
    def train_step(images, labels):
        (loss, logits), grads = grad_fn(images, labels)
        optimizer(grads)
        return loss

    for step in range(start_step, args.max_iter + 1):
        batch_loss = 0
        model.set_train()
        for batch, (images, labels) in enumerate(trainloader.create_tuple_iterator()):
            loss = float(train_step(images, labels).asnumpy())
            batch_loss += loss
            if batch % 5 == 0:
                print(f"loss: {loss:>7f} [{batch:>3d}/{iter_per_epoch:>3d}]")
        if step % args.step_size == 0:
            adjust_learning_rate(optimizer, step, args.step_size, args.gamma)
        if step % args.snapshots == 0:
            # MindSpore checkpoints use the .ckpt extension
            mindspore.save_checkpoint(model, '{}/bdcn_step{}.ckpt'.format(args.param_dir, step))
            print("****** saving ckpt in param ******")
        # Ring buffer holding the last `average_loss` epoch losses
        if len(mean_loss) < args.average_loss:
            mean_loss.append(batch_loss)
        else:
            mean_loss[pos] = batch_loss
            pos = (pos + 1) % args.average_loss
        if step % args.display == 0:
            tm = time.time() - start_time
            print("time: ", tm, " mean loss: ", np.mean(mean_loss))
            start_time = time.time()


def main():
    args = parse_args()
    logger = log.get_logger(args.log)
    args.logger = logger
    logger.info('*' * 80)
    logger.info('the args are the below')
    logger.info('*' * 80)
    for x in args.__dict__:
        logger.info(x + ',' + str(args.__dict__[x]))
    logger.info(cfg.config[args.dataset])
    logger.info('*' * 80)
    # os.environ['CUDA_VISIBLE_DEVICES'] = args.gpu
    if not os.path.exists(args.param_dir):
        os.mkdir(args.param_dir)
    # torch.manual_seed(int(time.time()))  # long
    model = bdcn.BDCN(pretrain=args.pretrain, logger=logger)
    # if args.complete_pretrain:
    #     model.load_state_dict(torch.load(args.complete_pretrain))
    logger.info(model)
    train(model, args)


def parse_args():
    parser = argparse.ArgumentParser(description='Train BDCN for different args')
    parser.add_argument('-d', '--dataset', type=str, choices=cfg.config.keys(),
                        default='bsds500', help='The dataset to train')
    parser.add_argument('--param-dir', type=str, default='params',
                        help='the directory to store the params')
    parser.add_argument('--lr', dest='base_lr', type=float, default=1e-4,
                        help='the base learning rate of model, default 1e-4')
    parser.add_argument('-m', '--momentum', type=float, default=0.9,
                        help='the momentum')
    parser.add_argument('-c', '--cuda', action='store_true',
                        help='whether use gpu to train network')
    parser.add_argument('-g', '--gpu', type=str, default='0',
                        help='the gpu id to train net')
    parser.add_argument('--weight-decay', type=float, default=0.0002,
                        help='the weight_decay of net')
    parser.add_argument('-r', '--resume', type=str, default=None,
                        help='whether resume from some, default is None')
    parser.add_argument('-p', '--pretrain', type=str, default=None,
                        help='init net from pretrained model default is None')
    parser.add_argument('--max-iter', type=int, default=50,
                        help='max iters to train network, default is 50')
    parser.add_argument('--iter-size', type=int, default=10,
                        help='iter size equal to the batch size, default 10')
    parser.add_argument('--average-loss', type=int, default=50,
                        help='smoothed loss, default is 50')
    parser.add_argument('-s', '--snapshots', type=int, default=10,
                        help='how many iters to store the params, default is 10')
    parser.add_argument('--step-size', type=int, default=10000,
                        help='the number of iters to decrease the learning rate, default is 10000')
    parser.add_argument('--display', type=int, default=20,
                        help='how many iters display one time, default is 20')
    parser.add_argument('-b', '--balance', type=float, default=1.1,
                        help='the parameter to balance the neg and pos, default is 1.1')
    parser.add_argument('-l', '--log', type=str, default='log.txt',
                        help='the file to store log, default is log.txt')
    parser.add_argument('-k', type=int, default=1,
                        help='the k-th split set of multicue')
    parser.add_argument('--batch-size', type=int, default=1,
                        help='batch size of one iteration, default 1')
    parser.add_argument('--crop-size', type=int, default=None,
                        help='the size of image to crop, default not crop')
    parser.add_argument('--yita', type=float, default=None,
                        help='the param to operate gt, default is data in the config file')
    parser.add_argument('--complete-pretrain', type=str, default=None,
                        help='finetune on the complete_pretrain, default None')
    parser.add_argument('--side-weight', type=float, default=0.5,
                        help='the loss weight of sideout, default 0.5')
    parser.add_argument('--fuse-weight', type=float, default=1.1,
                        help='the loss weight of fuse, default 1.1')
    parser.add_argument('--gamma', type=float, default=0.1,
                        help='the decay of learning rate, default 0.1')
    return parser.parse_args()


if __name__ == '__main__':
    main()
```
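To check the class-balancing weights independently of MindSpore, the per-image loop in `cross_entropy_loss2d` can be reproduced in plain Python. This is a minimal sketch: `balanced_weights` is a hypothetical helper, and it takes a label map as a list of rows instead of a tensor. The `valid == 0` guard is my addition; the original numpy code would divide by zero on an image with no 0/1 pixels.

```python
def balanced_weights(label, balance=1.1):
    """Per-pixel weights for one label map (list of rows of ints).

    Mirrors the loop in cross_entropy_loss2d: edge pixels (1) get
    neg / valid, background pixels (0) get balance * pos / valid,
    and any other value keeps weight 0.
    """
    flat = [v for row in label for v in row]
    pos = sum(1 for v in flat if v == 1)
    neg = sum(1 for v in flat if v == 0)
    valid = pos + neg
    if valid == 0:
        # Guard added in this sketch; the original would divide by zero
        return [[0.0 for _ in row] for row in label]
    w_edge = neg / valid
    w_background = pos * balance / valid

    def weight(v):
        if v == 1:
            return w_edge
        if v == 0:
            return w_background
        return 0.0

    return [[weight(v) for v in row] for row in label]
```

For a 2 x 2 map with one edge pixel, `balanced_weights([[1, 0], [0, 0]])` gives the edge pixel 3/4 and each background pixel 1.1 × 1/4. The rare edge class is thus weighted up by the share of background pixels.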

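The long `if`/`elif` chain in `train` assigns each parameter a learning-rate and weight-decay multiplier based on its name. The rules can be written as a table and applied by a small lookup. This is a sketch in plain Python: `RULES`, `DEFAULT_RULE` and `group_multipliers` are hypothetical names, and the function only reproduces the script's multipliers. It does not touch MindSpore.

```python
import re

# (name regex, lr multiplier for weights, lr multiplier for biases,
#  weight-decay multiplier for weights); biases never get weight decay.
# Order matters: the first pattern that matches wins, as in the elif chain.
RULES = [
    (r'conv[1-5]_[1-3]_down', 0.1, 0.2, 1),
    (r'.*conv[1-4]_[1-3]', 1, 2, 1),
    (r'.*conv5_[1-3]', 100, 200, 1),
    (r'score_dsn[1-5]', 0.01, 0.02, 1),
    (r'upsample_[248](_5)?', 0, 0, 0),
    (r'.*msblock[1-5]_[1-3]\.conv', 1, 2, 1),
]
DEFAULT_RULE = (0.001, 0.002, 1)


def group_multipliers(name):
    """Return (lr_mult, weight_decay_mult) for a parameter name, or None
    when the name contains neither 'weight' nor 'bias' (the training
    script leaves such parameters out of the optimizer)."""
    for pattern, w_lr, b_lr, w_wd in RULES:
        if re.match(pattern, name):  # re.match anchors at the start, like the script
            break
    else:
        w_lr, b_lr, w_wd = DEFAULT_RULE
    if 'weight' in name:
        return (w_lr, w_wd)
    if 'bias' in name:
        return (b_lr, 0)
    return None
```

Multiplying the two returned values by `base_lr` and `weight_decay` gives the `'lr'` and `'weight_decay'` entries the script puts in each group. A parameter such as a BatchNorm `gamma` returns `None` and is therefore not optimized.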

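Issue I6K1N0 already tracks the same problem, so this issue is being closed as a duplicate. If the problem persists, please add the specific details and set the issue status back to WIP, and we will follow up. Thanks.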
