
Problem description: the inference output is garbled; it consists entirely of the character “犬”.

Server: Atlas 800 (9000)

NPU: 910A × 8

CANN: 7.0RC1

mindformers: r1.0

baichuan2: 13B-chat

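Following the manual section "Automatic weight conversion, inference case 3: automatically splitting complete weights into 2-card distributed weights", I ran automatic weight conversion for 8-card distributed inference. The command was: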

bash run_distribute.sh "/root/mindformers-r1.0/hccl_8p_01234567_127.0.1.1.json" "/root/mindformers-r1.0/research/baichuan2/run_baichuan2_13b.yaml" [0,8] predict "I love beijing, because"

The contents of run_baichuan2_13b.yaml are as follows:

seed: 0
output_dir: './output' # path to save checkpoint/strategy
load_checkpoint: '/root/huggingface/Baichuan2-13B-Chat-mindformers/distributed/'
src_strategy_path_or_dir: ''
auto_trans_ckpt: False # If true, auto transform load_checkpoint to load in distributed model
only_save_strategy: False
resume_training: False
run_mode: 'predict'

# trainer config
trainer:
  type: CausalLanguageModelingTrainer
  model_name: 'baichuan2_13b'
# if True, do evaluate during the training process. if false, do nothing.
# note that the task trainer should support _evaluate_in_training function.
do_eval: False

# runner config
runner_config:
  epochs: 1
  batch_size: 2
  sink_mode: True
  sink_size: 2

# optimizer
optimizer:
  type: FP32StateAdamWeightDecay
  beta1: 0.9
  beta2: 0.95
  eps: 1.e-8

# lr sechdule
lr_schedule:
  type: CosineWithWarmUpLR
  learning_rate: 2.e-5 # pretrain:3.e-4
  lr_end: 1.e-6 # pretrain:3.e-5
  warmup_ratio: 0.03
  total_steps: -1 # -1 means it will load the total steps of the dataset

# dataset
train_dataset: &train_dataset
  data_loader:
    type: MindDataset
    dataset_dir: ""
    shuffle: True
  input_columns: ["input_ids", "labels"] # "input_ids", "labels" , labels are used in instruction finetune.
  num_parallel_workers: 8
  python_multiprocessing: False
  drop_remainder: True
  repeat: 1
  numa_enable: False
  prefetch_size: 1
train_dataset_task:
  type: CausalLanguageModelDataset
  dataset_config: *train_dataset

# eval dataset
eval_dataset: &eval_dataset
  data_loader:
    type: MindDataset
    dataset_dir: ""
    shuffle: False
  input_columns: ["input_ids", "labels"]
  num_parallel_workers: 8
  python_multiprocessing: False
  drop_remainder: False
  repeat: 1
  numa_enable: False
  prefetch_size: 1
eval_dataset_task:
  type: CausalLanguageModelDataset
  dataset_config: *eval_dataset

use_parallel: True
# parallel context config
parallel:
  parallel_mode: 1 # 0-data parallel, 1-semi-auto parallel, 2-auto parallel, 3-hybrid parallel
  gradients_mean: False
  enable_alltoall: False
  full_batch: True
  search_mode: "sharding_propagation"
  enable_parallel_optimizer: True
  strategy_ckpt_save_file: "./ckpt_strategy.ckpt"
  parallel_optimizer_config:
    gradient_accumulation_shard: False
    parallel_optimizer_threshold: 64
# default parallel of device num = 16 for Atlas 800
parallel_config:
  data_parallel: 1
  model_parallel: 8
  pipeline_stage: 1
  use_seq_parallel: False
  micro_batch_num: 1
  vocab_emb_dp: True
  gradient_aggregation_group: 4
# when model parallel is greater than 1, we can set micro_batch_interleave_num=2, that may accelerate the train process.
micro_batch_interleave_num: 1

# recompute config
recompute_config:
  recompute: True
  select_recompute: False
  parallel_optimizer_comm_recompute: False
  mp_comm_recompute: True
  recompute_slice_activation: True

# callbacks
callbacks:
  - type: MFLossMonitor
  - type: CheckpointMointor
    prefix: "baichuan2_13b"
    save_checkpoint_steps: 1000
    keep_checkpoint_max: 5
    integrated_save: False
    async_save: False
  - type: ObsMonitor

# mindspore context init config
context:
  mode: 0 #0--Graph Mode; 1--Pynative Mode
  device_target: "Ascend"
  enable_graph_kernel: False
  graph_kernel_flags: "--disable_expand_ops=Softmax,Dropout --enable_parallel_fusion=true --reduce_fuse_depth=8 --enable_auto_tensor_inplace=true"
  max_call_depth: 10000
  max_device_memory: "31GB"
  save_graphs: False
  save_graphs_path: "./graph"
  device_id: 0

# model config
model:
  model_config:
    type: LlamaConfig
    batch_size: 1 # add for increase predict
    seq_length: 512
    hidden_size: 5120
    num_layers: 40
    num_heads: 40
    vocab_size: 125696
    multiple_of: 128
    rms_norm_eps: 1.0e-6
    bos_token_id: 1
    eos_token_id: 2
    pad_token_id: 0
    ignore_token_id: -100
    compute_dtype: "float16"
    layernorm_compute_type: "float32"
    softmax_compute_type: "float32"
    param_init_type: "float16"
    use_past: True
    pretrain_seqlen: 2048 # seqlen of the pretrain checkpoint: 2048 for llama and 4096 for llama2
    extend_method: "None" # support "None", "PI", "NTK"
    compute_in_2d: False
    use_flash_attention: False
    offset: 0
    use_past_shard: False
    checkpoint_name_or_path: "path/to/baichuan2-13B-Chat.ckpt"
    repetition_penalty: 1
    temperature: 1.0
    max_decode_length: 512
    top_k: 3
    top_p: 1
    do_sample: False
  arch:
    type: Baichuan13BV2ForCausalLM

processor:
  return_tensors: ms
  tokenizer:
    vocab_file: "/root/huggingface/Baichuan2-13B-Chat-mindformers/tokenizer.model"
    unk_token: '<unk>'
    bos_token: '<s>'
    eos_token: '</s>'
    pad_token: '<unk>'
    type: Baichuan2Tokenizer
  type: LlamaProcessor

# metric
metric:
  type: PerplexityMetric

# wrapper cell config
runner_wrapper:
  type: MFTrainOneStepCell
  scale_sense:
    type: DynamicLossScaleUpdateCell
    loss_scale_value: 65536
    scale_factor: 2
    scale_window: 1000
  use_clip_grad: True

eval_callbacks:
  - type: ObsMonitor

auto_tune: False
filepath_prefix: './autotune'
autotune_per_step: 10

profile: False
profile_start_step: 1
profile_stop_step: 10
init_start_profile: False
profile_communication: False
profile_memory: True
layer_scale: False
layer_decay: 0.65
lr_scale_factor: 256

# aicc
remote_save_url: "Please input obs url on AICC platform."
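As a quick sanity check on the configuration above, the parallel layout can be compared with the device range passed on the command line. The following is only a minimal sketch: the helper names `device_count` and `check_parallel_layout` are hypothetical, the range `[0,8]` is assumed to be end-exclusive (devices 0 to 7), and the requirement that `num_heads` be divisible by `model_parallel` is a common tensor-parallel constraint assumed here rather than something stated in the post. Passing these checks does not by itself explain the garbled output.

```python
import re


def device_count(device_range: str) -> int:
    """Count devices in a '[start,end]' range, treating end as exclusive (assumption)."""
    match = re.fullmatch(r"\[(\d+),(\d+)\]", device_range.strip())
    if match is None:
        raise ValueError(f"unrecognised device range: {device_range!r}")
    start, end = int(match.group(1)), int(match.group(2))
    if end <= start:
        raise ValueError("device range end must be greater than start")
    return end - start


def check_parallel_layout(parallel_config: dict, num_heads: int, devices: int) -> list:
    """Return a list of human-readable problems; an empty list means the checks passed."""
    problems = []
    dp = parallel_config["data_parallel"]
    mp = parallel_config["model_parallel"]
    pp = parallel_config["pipeline_stage"]
    if dp * mp * pp != devices:
        problems.append(f"dp*mp*pp = {dp * mp * pp}, but {devices} devices were requested")
    # Assumed constraint: attention heads are split evenly across model-parallel ranks.
    if num_heads % mp != 0:
        problems.append(f"num_heads={num_heads} is not divisible by model_parallel={mp}")
    return problems


# Values copied from the command line and YAML in this post.
posted_parallel_config = {"data_parallel": 1, "model_parallel": 8, "pipeline_stage": 1}
devices = device_count("[0,8]")
problems = check_parallel_layout(posted_parallel_config, num_heads=40, devices=devices)
print(devices, problems)
```

With the posted values the layout is consistent (8 devices, no problems reported), so the parallel split sizes themselves do not look mismatched.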

The log is as follows:

[INFO] 2024-05-06 06:48:49,901 [mindformers/tools/utils.py:153] set_output_path: set output path to '/root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2'

[INFO] 2024-05-06 06:48:52,343 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:109] main: .........Build context config..........

[INFO] 2024-05-06 06:48:52,344 [mindformers/core/parallel_config.py:45] build_parallel_config: initial recompute_config from dict: {'recompute': True, 'select_recompute': False, 'parallel_optimizer_comm_recompute': False, 'mp_comm_recompute': True, 'recompute_slice_activation': True}

[INFO] 2024-05-06 06:48:52,344 [mindformers/core/parallel_config.py:51] build_parallel_config: initial parallel_config from dict: {'data_parallel': 1, 'model_parallel': 8, 'pipeline_stage': 1, 'use_seq_parallel': False, 'micro_batch_num': 1, 'vocab_emb_dp': True, 'gradient_aggregation_group': 4}

[INFO] 2024-05-06 06:48:52,345 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:111] main: context config is: [ParallelConfig]

_recompute:[ParallelConfig]

_recompute:True

_select_recompute:False

_parallel_optimizer_comm_recompute:False

_mp_comm_recompute:True

_recompute_slice_activation:True

select_recompute:False

use_seq_parallel:False

_gradient_aggregation_group:4

_embed_dp_mp_config:[ParallelConfig]

_dp_mp_config:[ParallelConfig]

_data_parallel:1

_model_parallel:8

use_seq_parallel:False

select_recompute:False

_vocab_emb_dp:True

use_seq_parallel:False

select_recompute:False

_pp_config:[ParallelConfig]

_pipeline_stage:1

_micro_batch_num:1

_moe_config:[ParallelConfig]

_dpmp:[ParallelConfig]

_data_parallel:1

_model_parallel:8

use_seq_parallel:False

select_recompute:False

_expert_parallel:1

use_seq_parallel:False

select_recompute:False

[INFO] 2024-05-06 06:48:52,346 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:112] main: moe config is: <mindformers.modules.transformer.moe.MoEConfig object at 0xfffecd2b5b50>

[INFO] 2024-05-06 06:48:52,346 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:46] update_checkpoint_config: Leave load_checkpoint may because:

[INFO] 2024-05-06 06:48:52,346 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:47] update_checkpoint_config: 1. resume training need resume training info.

[INFO] 2024-05-06 06:48:52,346 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:48] update_checkpoint_config: 2. need load distributed shard checkpoint.

[INFO] 2024-05-06 06:48:52,347 [mindformers/trainer/base_trainer.py:85] __init__: Now Running Task is: text_generation, Model is: baichuan2_13b

[INFO] 2024-05-06 06:48:52,347 [mindformers/trainer/base_trainer.py:191] _check_global_batch_size_for_auto_parallel: The current parallel mode is semi_auto_parallel, full batch is True,so global batch size will be changed: global_batch_size = batch_size * data_parallel * micro_batch_interleave_num * gradient_accumulation_steps = 2 = 2 * 1 * 1 * 1

[INFO] 2024-05-06 06:48:52,348 [mindformers/trainer/base_trainer.py:383] create_network: .........Build Network From Config..........

[WARNING] 2024-05-06 06:48:52,349 [mindformers/models/llama/llama_config.py:182] __init__: Argument `pretrain_seqlen` is deprecated. Use `scaling_factor` instead.

[WARNING] 2024-05-06 06:48:52,349 [mindformers/models/llama/llama_config.py:185] __init__: Argument `compute_in_2d` is deprecated.

[WARNING] 2024-05-06 06:48:52,349 [mindformers/models/llama/llama_config.py:188] __init__: Argument `use_past_shard` is deprecated.

[INFO] 2024-05-06 06:48:52,351 [mindformers/version_control.py:60] decorator: The Cell Reuse compilation acceleration feature is not supported when the environment variable ENABLE_CELL_REUSE is 0 or MindSpore version is earlier than 2.1.0 or stand_alone mode or pipeline_stages <= 1

[INFO] 2024-05-06 06:48:52,352 [mindformers/version_control.py:64] decorator:

The current ENABLE_CELL_REUSE=0, please set the environment variable as follows:

export ENABLE_CELL_REUSE=1 to enable the Cell Reuse compilation acceleration feature.

[INFO] 2024-05-06 06:48:52,352 [mindformers/version_control.py:73] decorator: The Cell Reuse compilation acceleration feature only works in pipeline parallel mode(pipeline_stage>1).Current pipeline stage=1, the feature is disabled by default.

[WARNING] 2024-05-06 06:48:52,440 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:52,529 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:52,600 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:52,670 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:52,742 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:52,813 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:52,884 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:52,956 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:53,027 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 06:48:53,100 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[INFO] 2024-05-06 06:48:55,555 [mindformers/models/base_model.py:117] load_checkpoint: model built, but weights is unloaded, since the config has no checkpoint_name_or_path attribute or checkpoint_name_or_path is None.

[INFO] 2024-05-06 06:48:55,567 [mindformers/trainer/base_trainer.py:534] count_parameters: Network Parameters: 13896 M.

[INFO] 2024-05-06 06:48:56,008 [mindformers/trainer/utils.py:323] transform_and_load_checkpoint: .........Building model.........

[INFO] 2024-05-06 06:51:18,015 [mindformers/trainer/utils.py:336] transform_and_load_checkpoint: /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2 is_share_disk: False

[INFO] 2024-05-06 06:51:18,017 [mindformers/trainer/utils.py:337] transform_and_load_checkpoint: world_size: 8

[INFO] 2024-05-06 06:51:18,019 [mindformers/trainer/utils.py:518] get_src_and_dst_strategy: .........Collecting strategy.........

[INFO] 2024-05-06 06:51:18,020 [mindformers/trainer/utils.py:525] get_src_and_dst_strategy: pipeline_stage = 1, strategy using /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2/strategy/ckpt_strategy_rank_0.ckpt

[INFO] 2024-05-06 06:51:18,024 [mindformers/trainer/utils.py:435] make_softlink: Make soft link of checkpoint file from /root/huggingface/Baichuan2-13B-Chat-mindformers/single/rank_0/Baichuan2-13B-Chat.ckpt to /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2/softlink_ckpt/Baichuan2-13B-Chat/rank_0/Baichuan2-13B-Chat.ckpt

[INFO] 2024-05-06 06:51:18,027 [mindformers/trainer/utils.py:565] transform_ckpt: .........Transforming ckpt.........

[INFO] 2024-05-06 06:51:18,028 [mindformers/trainer/utils.py:566] transform_ckpt: Src ckpt strategy: None

[INFO] 2024-05-06 06:51:18,028 [mindformers/trainer/utils.py:567] transform_ckpt: Src ckpt: /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2/softlink_ckpt/Baichuan2-13B-Chat

[INFO] 2024-05-06 06:51:18,028 [mindformers/trainer/utils.py:568] transform_ckpt: Dst ckpt strategy: /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2/strategy/ckpt_strategy_rank_0.ckpt

[INFO] 2024-05-06 06:51:18,028 [mindformers/trainer/utils.py:569] transform_ckpt: Dst ckpt: /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2/transformed_checkpoint/Baichuan2-13B-Chat

[INFO] 2024-05-06 06:57:13,740 [mindformers/trainer/utils.py:576] transform_ckpt: .........Transform succeed!.........

[INFO] 2024-05-06 06:57:13,742 [mindformers/trainer/utils.py:708] show_progress: Transforming checkpoint: |▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮▮|100%

[INFO] 2024-05-06 06:57:13,743 [mindformers/trainer/utils.py:714] load_ckpt: .............Start load checkpoint from checkpoint..................

[INFO] 2024-05-06 06:57:13,743 [mindformers/trainer/utils.py:245] load_distributed_checkpoint: When distributed loads are sliced weights,load_checkpoint should be a checkpoint directory containing the directory of rank_{0-*},The directory structure is as follows: **checkpoint_root_dir/rank_{0-*}/**.ckpt

[INFO] 2024-05-06 06:57:24,938 [mindformers/trainer/utils.py:258] load_distributed_checkpoint: Distribute load is success.

[INFO] 2024-05-06 06:57:24,938 [mindformers/trainer/utils.py:721] load_ckpt: loaded checkpoint: /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed-2/transformed_checkpoint/Baichuan2-13B-Chat

[INFO] 2024-05-06 06:57:29,405 [mindformers/trainer/utils.py:745] load_ckpt: Network parameters are not loaded: (['model.layers.0.attention.kvcache_mgr.key_past', 'model.layers.0.attention.kvcache_mgr.value_past', 'model.layers.1.attention.kvcache_mgr.key_past', 'model.layers.1.attention.kvcache_mgr.value_past', 'model.layers.2.attention.kvcache_mgr.key_past', 'model.layers.2.attention.kvcache_mgr.value_past', 'model.layers.3.attention.kvcache_mgr.key_past', 'model.layers.3.attention.kvcache_mgr.value_past', 'model.layers.4.attention.kvcache_mgr.key_past', 'model.layers.4.attention.kvcache_mgr.value_past', 'model.layers.5.attention.kvcache_mgr.key_past', 'model.layers.5.attention.kvcache_mgr.value_past', 'model.layers.6.attention.kvcache_mgr.key_past', 'model.layers.6.attention.kvcache_mgr.value_past', 'model.layers.7.attention.kvcache_mgr.key_past', 'model.layers.7.attention.kvcache_mgr.value_past', 'model.layers.8.attention.kvcache_mgr.key_past', 'model.layers.8.attention.kvcache_mgr.value_past', 'model.layers.9.attention.kvcache_mgr.key_past', 'model.layers.9.attention.kvcache_mgr.value_past', 'model.layers.10.attention.kvcache_mgr.key_past', 'model.layers.10.attention.kvcache_mgr.value_past', 'model.layers.11.attention.kvcache_mgr.key_past', 'model.layers.11.attention.kvcache_mgr.value_past', 'model.layers.12.attention.kvcache_mgr.key_past', 'model.layers.12.attention.kvcache_mgr.value_past', 'model.layers.13.attention.kvcache_mgr.key_past', 'model.layers.13.attention.kvcache_mgr.value_past', 'model.layers.14.attention.kvcache_mgr.key_past', 'model.layers.14.attention.kvcache_mgr.value_past', 'model.layers.15.attention.kvcache_mgr.key_past', 'model.layers.15.attention.kvcache_mgr.value_past', 'model.layers.16.attention.kvcache_mgr.key_past', 'model.layers.16.attention.kvcache_mgr.value_past', 'model.layers.17.attention.kvcache_mgr.key_past', 'model.layers.17.attention.kvcache_mgr.value_past', 'model.layers.18.attention.kvcache_mgr.key_past', 
'model.layers.18.attention.kvcache_mgr.value_past', 'model.layers.19.attention.kvcache_mgr.key_past', 'model.layers.19.attention.kvcache_mgr.value_past', 'model.layers.20.attention.kvcache_mgr.key_past', 'model.layers.20.attention.kvcache_mgr.value_past', 'model.layers.21.attention.kvcache_mgr.key_past', 'model.layers.21.attention.kvcache_mgr.value_past', 'model.layers.22.attention.kvcache_mgr.key_past', 'model.layers.22.attention.kvcache_mgr.value_past', 'model.layers.23.attention.kvcache_mgr.key_past', 'model.layers.23.attention.kvcache_mgr.value_past', 'model.layers.24.attention.kvcache_mgr.key_past', 'model.layers.24.attention.kvcache_mgr.value_past', 'model.layers.25.attention.kvcache_mgr.key_past', 'model.layers.25.attention.kvcache_mgr.value_past', 'model.layers.26.attention.kvcache_mgr.key_past', 'model.layers.26.attention.kvcache_mgr.value_past', 'model.layers.27.attention.kvcache_mgr.key_past', 'model.layers.27.attention.kvcache_mgr.value_past', 'model.layers.28.attention.kvcache_mgr.key_past', 'model.layers.28.attention.kvcache_mgr.value_past', 'model.layers.29.attention.kvcache_mgr.key_past', 'model.layers.29.attention.kvcache_mgr.value_past', 'model.layers.30.attention.kvcache_mgr.key_past', 'model.layers.30.attention.kvcache_mgr.value_past', 'model.layers.31.attention.kvcache_mgr.key_past', 'model.layers.31.attention.kvcache_mgr.value_past', 'model.layers.32.attention.kvcache_mgr.key_past', 'model.layers.32.attention.kvcache_mgr.value_past', 'model.layers.33.attention.kvcache_mgr.key_past', 'model.layers.33.attention.kvcache_mgr.value_past', 'model.layers.34.attention.kvcache_mgr.key_past', 'model.layers.34.attention.kvcache_mgr.value_past', 'model.layers.35.attention.kvcache_mgr.key_past', 'model.layers.35.attention.kvcache_mgr.value_past', 'model.layers.36.attention.kvcache_mgr.key_past', 'model.layers.36.attention.kvcache_mgr.value_past', 'model.layers.37.attention.kvcache_mgr.key_past', 'model.layers.37.attention.kvcache_mgr.value_past', 
'model.layers.38.attention.kvcache_mgr.key_past', 'model.layers.38.attention.kvcache_mgr.value_past', 'model.layers.39.attention.kvcache_mgr.key_past', 'model.layers.39.attention.kvcache_mgr.value_past'], [])

[WARNING] 2024-05-06 06:57:29,448 [mindformers/generation/text_generator.py:1099] generate: When do_sample is set to False, top_k will be set to 1 and top_p will be set to 0, making them inactive.

[INFO] 2024-05-06 06:57:29,449 [mindformers/generation/text_generator.py:1103] generate: Generation Config is: {'max_length': 512, 'max_new_tokens': None, 'num_beams': 1, 'do_sample': False, 'use_past': True, 'temperature': 1.0, 'top_k': 0, 'top_p': 1.0, 'repetition_penalty': 1, 'encoder_repetition_penalty': 1.0, 'renormalize_logits': False, 'pad_token_id': 0, 'bos_token_id': 1, 'eos_token_id': 2, '_from_model_config': True}

[INFO] 2024-05-06 06:57:29,449 [mindformers/generation/text_generator.py:176] _get_generation_mode: The generation mode will be **GREEDY_SEARCH**.

[INFO] 2024-05-06 07:02:04,564 [mindformers/generation/text_generator.py:478] _greedy_search: total time: 275.1143374443054 s; generated tokens: 506 tokens; generate speed: 1.8392352964971717 tokens/s

[INFO] 2024-05-06 07:02:04,612 [mindformers/trainer/base_trainer.py:940] predict_process: output result is: [{'text_generation_text': ['I love beijing, because犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬']}]

[INFO] 2024-05-06 07:02:04,612 [mindformers/trainer/base_trainer.py:941] predict_process: output result is saved at: text_generation_result.txt

[INFO] 2024-05-06 07:02:04,612 [mindformers/trainer/base_trainer.py:942] predict_process: .........Predict Over!.............
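When comparing several runs (for example with different checkpoints or parallel settings), it can help to detect this failure mode automatically instead of reading the output by eye. The sketch below is a hypothetical helper, `repeated_suffix`, not part of mindformers: it reports whether everything generated after the prompt is one single repeated character.

```python
from typing import Optional, Tuple


def repeated_suffix(text: str, prompt: str) -> Optional[Tuple[str, int]]:
    """Return (char, count) if the completion after `prompt` is one repeated character."""
    if not text.startswith(prompt):
        return None
    completion = text[len(prompt):]
    if len(completion) < 2:
        return None
    first = completion[0]
    if all(ch == first for ch in completion):
        return first, len(completion)
    return None


# Shortened stand-in for the logged output; the real completion is much longer.
prompt = "I love beijing, because"
sample = prompt + "犬" * 5
print(repeated_suffix(sample, prompt))           # ('犬', 5)
print(repeated_suffix(prompt + " it is", prompt))  # None
```

The text written to text_generation_result.txt could be passed through the same check after each run.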

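When running 8-card distributed inference through the high-level interface, with the chat prompt markers added, the same problem occurs. Command: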

bash ./run_singlenode.sh "python baichuan2/run_baichuan2.py --config baichuan2/run_baichuan2_13b.yaml --run_mode predict --use_parallel True --load_checkpoint /root/huggingface/Baichuan2-13B-Chat-mindformers/distributed/ --auto_trans_ckpt False --predict_data <reserved_106>"你是谁"<reserved_107>" /root/mindformers-r1.0/hccl_8p_01234567_127.0.1.1.json [0,8] 8
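The prompt in this command wraps the question in the `<reserved_106>` and `<reserved_107>` markers. Because the angle brackets and the nested double quotes are easy to get wrong in a shell, one option is to build the prompt string in Python and quote it before pasting it into the command. This is a minimal sketch; `build_chat_prompt`, `USER_TOKEN` and `ASSISTANT_TOKEN` are hypothetical names, and treating the two markers as user and assistant turn markers is an assumption about the Baichuan2 chat format.

```python
import shlex

# Marker strings copied from the command in this post; the role names are assumptions.
USER_TOKEN = "<reserved_106>"
ASSISTANT_TOKEN = "<reserved_107>"


def build_chat_prompt(query: str) -> str:
    """Wrap a single user query in the chat markers."""
    return f"{USER_TOKEN}{query}{ASSISTANT_TOKEN}"


prompt = build_chat_prompt("你是谁")
quoted = shlex.quote(prompt)  # safe to paste as one shell argument
print(prompt)
print(quoted)

# Round trip: the shell-style parser recovers exactly one argument.
assert shlex.split(quoted) == [prompt]
```

The second log shows that the prompt did reach the model as `<reserved_106>你是谁<reserved_107>`, so the quoting in the original command worked in this case; the helper only makes that less fragile.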

Log:

[INFO] 2024-05-06 07:25:38,176 [mindformers/tools/utils.py:153] set_output_path: set output path to '/root/mindformers-r1.0/research/output'

[INFO] 2024-05-06 07:25:38,176 [mindformers/trainer/base_trainer.py:85] __init__: Now Running Task is: text_generation, Model is: baichuan2_13b

[INFO] 2024-05-06 07:25:38,176 [mindformers/core/parallel_config.py:45] build_parallel_config: initial recompute_config from dict: {'recompute': True, 'select_recompute': False, 'parallel_optimizer_comm_recompute': False, 'mp_comm_recompute': True, 'recompute_slice_activation': True}

[INFO] 2024-05-06 07:25:38,177 [mindformers/core/parallel_config.py:51] build_parallel_config: initial parallel_config from dict: {'data_parallel': 1, 'model_parallel': 8, 'pipeline_stage': 1, 'use_seq_parallel': False, 'micro_batch_num': 1, 'vocab_emb_dp': True, 'gradient_aggregation_group': 4}

[INFO] 2024-05-06 07:25:38,177 [mindformers/trainer/base_trainer.py:191] _check_global_batch_size_for_auto_parallel: The current parallel mode is semi_auto_parallel, full batch is True,so global batch size will be changed: global_batch_size = batch_size * data_parallel * micro_batch_interleave_num * gradient_accumulation_steps = 2 = 2 * 1 * 1 * 1

[INFO] 2024-05-06 07:25:38,177 [mindformers/trainer/base_trainer.py:383] create_network: .........Build Network From Config..........

[WARNING] 2024-05-06 07:25:38,178 [mindformers/models/llama/llama_config.py:182] __init__: Argument `pretrain_seqlen` is deprecated. Use `scaling_factor` instead.

[WARNING] 2024-05-06 07:25:38,178 [mindformers/models/llama/llama_config.py:185] __init__: Argument `compute_in_2d` is deprecated.

[WARNING] 2024-05-06 07:25:38,178 [mindformers/models/llama/llama_config.py:188] __init__: Argument `use_past_shard` is deprecated.

[INFO] 2024-05-06 07:25:38,178 [mindformers/version_control.py:60] decorator: The Cell Reuse compilation acceleration feature is not supported when the environment variable ENABLE_CELL_REUSE is 0 or MindSpore version is earlier than 2.1.0 or stand_alone mode or pipeline_stages <= 1

[INFO] 2024-05-06 07:25:38,178 [mindformers/version_control.py:64] decorator:

The current ENABLE_CELL_REUSE=0, please set the environment variable as follows:

export ENABLE_CELL_REUSE=1 to enable the Cell Reuse compilation acceleration feature.

[INFO] 2024-05-06 07:25:38,178 [mindformers/version_control.py:73] decorator: The Cell Reuse compilation acceleration feature only works in pipeline parallel mode(pipeline_stage>1).Current pipeline stage=1, the feature is disabled by default.

[WARNING] 2024-05-06 07:25:38,252 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,334 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,400 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,465 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,552 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,632 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,712 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,787 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,864 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-06 07:25:38,942 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[INFO] 2024-05-06 07:25:41,367 [mindformers/models/base_model.py:117] load_checkpoint: model built, but weights is unloaded, since the config has no checkpoint_name_or_path attribute or checkpoint_name_or_path is None.

[INFO] 2024-05-06 07:25:41,379 [mindformers/trainer/base_trainer.py:534] count_parameters: Network Parameters: 13896 M.

[INFO] 2024-05-06 07:25:41,819 [mindformers/trainer/utils.py:323] transform_and_load_checkpoint: .........Building model.........

[INFO] 2024-05-06 07:28:08,463 [mindformers/trainer/utils.py:714] load_ckpt: .............Start load checkpoint from checkpoint..................

[INFO] 2024-05-06 07:28:08,464 [mindformers/trainer/utils.py:245] load_distributed_checkpoint: When distributed loads are sliced weights,load_checkpoint should be a checkpoint directory containing the directory of rank_{0-*},The directory structure is as follows: **checkpoint_root_dir/rank_{0-*}/**.ckpt

[INFO] 2024-05-06 07:28:23,237 [mindformers/trainer/utils.py:258] load_distributed_checkpoint: Distribute load is success.

[INFO] 2024-05-06 07:28:28,109 [mindformers/trainer/utils.py:745] load_ckpt: Network parameters are not loaded: (['model.layers.0.attention.kvcache_mgr.key_past', 'model.layers.0.attention.kvcache_mgr.value_past', 'model.layers.1.attention.kvcache_mgr.key_past', 'model.layers.1.attention.kvcache_mgr.value_past', 'model.layers.2.attention.kvcache_mgr.key_past', 'model.layers.2.attention.kvcache_mgr.value_past', 'model.layers.3.attention.kvcache_mgr.key_past', 'model.layers.3.attention.kvcache_mgr.value_past', 'model.layers.4.attention.kvcache_mgr.key_past', 'model.layers.4.attention.kvcache_mgr.value_past', 'model.layers.5.attention.kvcache_mgr.key_past', 'model.layers.5.attention.kvcache_mgr.value_past', 'model.layers.6.attention.kvcache_mgr.key_past', 'model.layers.6.attention.kvcache_mgr.value_past', 'model.layers.7.attention.kvcache_mgr.key_past', 'model.layers.7.attention.kvcache_mgr.value_past', 'model.layers.8.attention.kvcache_mgr.key_past', 'model.layers.8.attention.kvcache_mgr.value_past', 'model.layers.9.attention.kvcache_mgr.key_past', 'model.layers.9.attention.kvcache_mgr.value_past', 'model.layers.10.attention.kvcache_mgr.key_past', 'model.layers.10.attention.kvcache_mgr.value_past', 'model.layers.11.attention.kvcache_mgr.key_past', 'model.layers.11.attention.kvcache_mgr.value_past', 'model.layers.12.attention.kvcache_mgr.key_past', 'model.layers.12.attention.kvcache_mgr.value_past', 'model.layers.13.attention.kvcache_mgr.key_past', 'model.layers.13.attention.kvcache_mgr.value_past', 'model.layers.14.attention.kvcache_mgr.key_past', 'model.layers.14.attention.kvcache_mgr.value_past', 'model.layers.15.attention.kvcache_mgr.key_past', 'model.layers.15.attention.kvcache_mgr.value_past', 'model.layers.16.attention.kvcache_mgr.key_past', 'model.layers.16.attention.kvcache_mgr.value_past', 'model.layers.17.attention.kvcache_mgr.key_past', 'model.layers.17.attention.kvcache_mgr.value_past', 'model.layers.18.attention.kvcache_mgr.key_past', 
'model.layers.18.attention.kvcache_mgr.value_past', 'model.layers.19.attention.kvcache_mgr.key_past', 'model.layers.19.attention.kvcache_mgr.value_past', 'model.layers.20.attention.kvcache_mgr.key_past', 'model.layers.20.attention.kvcache_mgr.value_past', 'model.layers.21.attention.kvcache_mgr.key_past', 'model.layers.21.attention.kvcache_mgr.value_past', 'model.layers.22.attention.kvcache_mgr.key_past', 'model.layers.22.attention.kvcache_mgr.value_past', 'model.layers.23.attention.kvcache_mgr.key_past', 'model.layers.23.attention.kvcache_mgr.value_past', 'model.layers.24.attention.kvcache_mgr.key_past', 'model.layers.24.attention.kvcache_mgr.value_past', 'model.layers.25.attention.kvcache_mgr.key_past', 'model.layers.25.attention.kvcache_mgr.value_past', 'model.layers.26.attention.kvcache_mgr.key_past', 'model.layers.26.attention.kvcache_mgr.value_past', 'model.layers.27.attention.kvcache_mgr.key_past', 'model.layers.27.attention.kvcache_mgr.value_past', 'model.layers.28.attention.kvcache_mgr.key_past', 'model.layers.28.attention.kvcache_mgr.value_past', 'model.layers.29.attention.kvcache_mgr.key_past', 'model.layers.29.attention.kvcache_mgr.value_past', 'model.layers.30.attention.kvcache_mgr.key_past', 'model.layers.30.attention.kvcache_mgr.value_past', 'model.layers.31.attention.kvcache_mgr.key_past', 'model.layers.31.attention.kvcache_mgr.value_past', 'model.layers.32.attention.kvcache_mgr.key_past', 'model.layers.32.attention.kvcache_mgr.value_past', 'model.layers.33.attention.kvcache_mgr.key_past', 'model.layers.33.attention.kvcache_mgr.value_past', 'model.layers.34.attention.kvcache_mgr.key_past', 'model.layers.34.attention.kvcache_mgr.value_past', 'model.layers.35.attention.kvcache_mgr.key_past', 'model.layers.35.attention.kvcache_mgr.value_past', 'model.layers.36.attention.kvcache_mgr.key_past', 'model.layers.36.attention.kvcache_mgr.value_past', 'model.layers.37.attention.kvcache_mgr.key_past', 'model.layers.37.attention.kvcache_mgr.value_past', 
'model.layers.38.attention.kvcache_mgr.key_past', 'model.layers.38.attention.kvcache_mgr.value_past', 'model.layers.39.attention.kvcache_mgr.key_past', 'model.layers.39.attention.kvcache_mgr.value_past'], [])

[WARNING] 2024-05-06 07:28:28,140 [mindformers/generation/text_generator.py:1099] generate: When do_sample is set to False, top_k will be set to 1 and top_p will be set to 0, making them inactive.

[INFO] 2024-05-06 07:28:28,141 [mindformers/generation/text_generator.py:1103] generate: Generation Config is: {'max_length': 512, 'max_new_tokens': None, 'num_beams': 1, 'do_sample': False, 'use_past': True, 'temperature': 1.0, 'top_k': 0, 'top_p': 1.0, 'repetition_penalty': 1, 'encoder_repetition_penalty': 1.0, 'renormalize_logits': False, 'pad_token_id': 0, 'bos_token_id': 1, 'eos_token_id': 2, '_from_model_config': True}

[INFO] 2024-05-06 07:28:28,141 [mindformers/generation/text_generator.py:176] _get_generation_mode: The generation mode will be **GREEDY_SEARCH**.

[INFO] 2024-05-06 07:33:01,158 [mindformers/generation/text_generator.py:478] _greedy_search: total time: 273.01722240448 s; generated tokens: 509 tokens; generate speed: 1.8643512505079523 tokens/s

[INFO] 2024-05-06 07:33:01,206 [mindformers/trainer/base_trainer.py:940] predict_process: output result is: [{'text_generation_text': ['<reserved_106>你是谁<reserved_107>犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬']}]

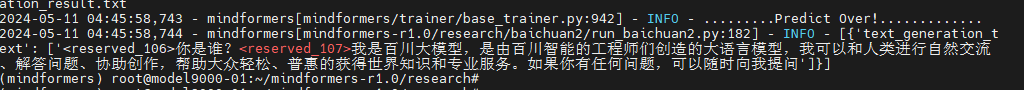

[INFO] 2024-05-06 07:33:01,207 [mindformers/trainer/base_trainer.py:941] predict_process: output result is saved at: text_generation_result.txt

[INFO] 2024-05-06 07:33:01,207 [mindformers/trainer/base_trainer.py:942] predict_process: .........Predict Over!.............

[INFO] 2024-05-06 07:33:01,207 [mindformers-r1.0/research/baichuan2/run_baichuan2.py:182] main: [{'text_generation_text': ['<reserved_106>你是谁<reserved_107>犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬犬']}]


Neither <reserved_106>"你是谁"<reserved_107> nor <reserved_106>你是谁<reserved_107> ("Who are you") works as the prompt: in both cases the answer consists entirely of the character "犬".

The complete weights (about 27 GB) and the vocabulary were both downloaded from the links provided in this project's mindformers README.

After switching to baichuan2-7b-chat, single-card inference answers normally:

With 8 cards, the output is garbled as well:

[INFO] 2024-05-11 05:59:17,366 [mindformers/tools/utils.py:153] set_output_path: set output path to '/root/mindformers-r1.0/output'

[INFO] 2024-05-11 05:59:19,958 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:109] main: .........Build context config..........

[INFO] 2024-05-11 05:59:19,959 [mindformers/core/parallel_config.py:45] build_parallel_config: initial recompute_config from dict: {'recompute': True, 'select_recompute': False, 'parallel_optimizer_comm_recompute': False, 'mp_comm_recompute': True, 'recompute_slice_activation': True}

[INFO] 2024-05-11 05:59:19,959 [mindformers/core/parallel_config.py:51] build_parallel_config: initial parallel_config from dict: {'data_parallel': 1, 'model_parallel': 8, 'pipeline_stage': 1, 'micro_batch_num': 1, 'vocab_emb_dp': True, 'gradient_aggregation_group': 4}

[INFO] 2024-05-11 05:59:19,960 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:111] main: context config is: [ParallelConfig]

_recompute:[ParallelConfig]

_recompute:True

_select_recompute:False

_parallel_optimizer_comm_recompute:False

_mp_comm_recompute:True

_recompute_slice_activation:True

select_recompute:False

use_seq_parallel:False

_gradient_aggregation_group:4

_embed_dp_mp_config:[ParallelConfig]

_dp_mp_config:[ParallelConfig]

_data_parallel:1

_model_parallel:8

use_seq_parallel:False

select_recompute:False

_vocab_emb_dp:True

use_seq_parallel:False

select_recompute:False

_pp_config:[ParallelConfig]

_pipeline_stage:1

_micro_batch_num:1

_moe_config:[ParallelConfig]

_dpmp:[ParallelConfig]

_data_parallel:1

_model_parallel:8

use_seq_parallel:False

select_recompute:False

_expert_parallel:1

use_seq_parallel:False

select_recompute:False

[INFO] 2024-05-11 05:59:19,960 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:112] main: moe config is: <mindformers.modules.transformer.moe.MoEConfig object at 0xfffec76d5940>

[INFO] 2024-05-11 05:59:19,961 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:46] update_checkpoint_config: Leave load_checkpoint may because:

[INFO] 2024-05-11 05:59:19,961 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:47] update_checkpoint_config: 1. resume training need resume training info.

[INFO] 2024-05-11 05:59:19,961 [mindformers-r1.0/scripts/mf_parallel0/run_mindformer.py:48] update_checkpoint_config: 2. need load distributed shard checkpoint.

[INFO] 2024-05-11 05:59:19,961 [mindformers/trainer/base_trainer.py:85] __init__: Now Running Task is: text_generation, Model is: baichuan2_7b

[INFO] 2024-05-11 05:59:19,961 [mindformers/trainer/base_trainer.py:191] _check_global_batch_size_for_auto_parallel: The current parallel mode is semi_auto_parallel, full batch is True,so global batch size will be changed: global_batch_size = batch_size * data_parallel * micro_batch_interleave_num * gradient_accumulation_steps = 1 = 1 * 1 * 1 * 1

[INFO] 2024-05-11 05:59:19,962 [mindformers/trainer/base_trainer.py:383] create_network: .........Build Network From Config..........

[WARNING] 2024-05-11 05:59:19,962 [mindformers/models/llama/llama_config.py:185] __init__: Argument `compute_in_2d` is deprecated.

[INFO] 2024-05-11 05:59:19,964 [mindformers/version_control.py:60] decorator: The Cell Reuse compilation acceleration feature is not supported when the environment variable ENABLE_CELL_REUSE is 0 or MindSpore version is earlier than 2.1.0 or stand_alone mode or pipeline_stages <= 1

[INFO] 2024-05-11 05:59:19,965 [mindformers/version_control.py:64] decorator:

The current ENABLE_CELL_REUSE=0, please set the environment variable as follows:

export ENABLE_CELL_REUSE=1 to enable the Cell Reuse compilation acceleration feature.

[INFO] 2024-05-11 05:59:19,965 [mindformers/version_control.py:73] decorator: The Cell Reuse compilation acceleration feature only works in pipeline parallel mode(pipeline_stage>1).Current pipeline stage=1, the feature is disabled by default.

[WARNING] 2024-05-11 05:59:20,042 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,111 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,176 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,235 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,292 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,347 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,407 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,467 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,528 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[WARNING] 2024-05-11 05:59:20,588 [mindformers/modules/layers.py:554] shard: The user passed the custom defined activation function True. If the user want to enable shard for the activation cell, the user should set the shard for each primitives in the cell.

[INFO] 2024-05-11 05:59:21,936 [mindformers/models/base_model.py:117] load_checkpoint: model built, but weights is unloaded, since the config has no checkpoint_name_or_path attribute or checkpoint_name_or_path is None.

[INFO] 2024-05-11 05:59:21,946 [mindformers/trainer/base_trainer.py:534] count_parameters: Network Parameters: 7505 M.

[INFO] 2024-05-11 05:59:22,407 [mindformers/trainer/utils.py:323] transform_and_load_checkpoint: .........Building model.........

[INFO] 2024-05-11 06:01:46,209 [mindformers/trainer/utils.py:714] load_ckpt: .............Start load checkpoint from checkpoint..................

[INFO] 2024-05-11 06:01:46,210 [mindformers/trainer/utils.py:245] load_distributed_checkpoint: When distributed loads are sliced weights,load_checkpoint should be a checkpoint directory containing the directory of rank_{0-*},The directory structure is as follows: **checkpoint_root_dir/rank_{0-*}/**.ckpt

[INFO] 2024-05-11 06:01:53,984 [mindformers/trainer/utils.py:258] load_distributed_checkpoint: Distribute load is success.

[INFO] 2024-05-11 06:01:57,025 [mindformers/trainer/utils.py:745] load_ckpt: Network parameters are not loaded: (['model.layers.0.attention.kvcache_mgr.key_past', 'model.layers.0.attention.kvcache_mgr.value_past', 'model.layers.1.attention.kvcache_mgr.key_past', 'model.layers.1.attention.kvcache_mgr.value_past', 'model.layers.2.attention.kvcache_mgr.key_past', 'model.layers.2.attention.kvcache_mgr.value_past', 'model.layers.3.attention.kvcache_mgr.key_past', 'model.layers.3.attention.kvcache_mgr.value_past', 'model.layers.4.attention.kvcache_mgr.key_past', 'model.layers.4.attention.kvcache_mgr.value_past', 'model.layers.5.attention.kvcache_mgr.key_past', 'model.layers.5.attention.kvcache_mgr.value_past', 'model.layers.6.attention.kvcache_mgr.key_past', 'model.layers.6.attention.kvcache_mgr.value_past', 'model.layers.7.attention.kvcache_mgr.key_past', 'model.layers.7.attention.kvcache_mgr.value_past', 'model.layers.8.attention.kvcache_mgr.key_past', 'model.layers.8.attention.kvcache_mgr.value_past', 'model.layers.9.attention.kvcache_mgr.key_past', 'model.layers.9.attention.kvcache_mgr.value_past', 'model.layers.10.attention.kvcache_mgr.key_past', 'model.layers.10.attention.kvcache_mgr.value_past', 'model.layers.11.attention.kvcache_mgr.key_past', 'model.layers.11.attention.kvcache_mgr.value_past', 'model.layers.12.attention.kvcache_mgr.key_past', 'model.layers.12.attention.kvcache_mgr.value_past', 'model.layers.13.attention.kvcache_mgr.key_past', 'model.layers.13.attention.kvcache_mgr.value_past', 'model.layers.14.attention.kvcache_mgr.key_past', 'model.layers.14.attention.kvcache_mgr.value_past', 'model.layers.15.attention.kvcache_mgr.key_past', 'model.layers.15.attention.kvcache_mgr.value_past', 'model.layers.16.attention.kvcache_mgr.key_past', 'model.layers.16.attention.kvcache_mgr.value_past', 'model.layers.17.attention.kvcache_mgr.key_past', 'model.layers.17.attention.kvcache_mgr.value_past', 'model.layers.18.attention.kvcache_mgr.key_past', 
'model.layers.18.attention.kvcache_mgr.value_past', 'model.layers.19.attention.kvcache_mgr.key_past', 'model.layers.19.attention.kvcache_mgr.value_past', 'model.layers.20.attention.kvcache_mgr.key_past', 'model.layers.20.attention.kvcache_mgr.value_past', 'model.layers.21.attention.kvcache_mgr.key_past', 'model.layers.21.attention.kvcache_mgr.value_past', 'model.layers.22.attention.kvcache_mgr.key_past', 'model.layers.22.attention.kvcache_mgr.value_past', 'model.layers.23.attention.kvcache_mgr.key_past', 'model.layers.23.attention.kvcache_mgr.value_past', 'model.layers.24.attention.kvcache_mgr.key_past', 'model.layers.24.attention.kvcache_mgr.value_past', 'model.layers.25.attention.kvcache_mgr.key_past', 'model.layers.25.attention.kvcache_mgr.value_past', 'model.layers.26.attention.kvcache_mgr.key_past', 'model.layers.26.attention.kvcache_mgr.value_past', 'model.layers.27.attention.kvcache_mgr.key_past', 'model.layers.27.attention.kvcache_mgr.value_past', 'model.layers.28.attention.kvcache_mgr.key_past', 'model.layers.28.attention.kvcache_mgr.value_past', 'model.layers.29.attention.kvcache_mgr.key_past', 'model.layers.29.attention.kvcache_mgr.value_past', 'model.layers.30.attention.kvcache_mgr.key_past', 'model.layers.30.attention.kvcache_mgr.value_past', 'model.layers.31.attention.kvcache_mgr.key_past', 'model.layers.31.attention.kvcache_mgr.value_past'], [])

[INFO] 2024-05-11 06:01:57,056 [mindformers/generation/text_generator.py:1103] generate: Generation Config is: {'max_length': 512, 'max_new_tokens': 64, 'num_beams': 1, 'do_sample': True, 'use_past': True, 'temperature': 1.0, 'top_k': 5, 'top_p': 0.85, 'repetition_penalty': 1.05, 'encoder_repetition_penalty': 1.0, 'renormalize_logits': False, 'pad_token_id': 0, 'bos_token_id': 1, 'eos_token_id': 2, '_from_model_config': True}

[INFO] 2024-05-11 06:01:57,057 [mindformers/generation/text_generator.py:174] _get_generation_mode: The generation mode will be **SAMPLE**.

[INFO] 2024-05-11 06:06:07,265 [mindformers/generation/text_generator.py:724] _sample: total time: 250.2076539993286 s; generated tokens: 64 tokens; generate speed: 0.255787538778377 tokens/s

[INFO] 2024-05-11 06:06:07,276 [mindformers/trainer/base_trainer.py:940] predict_process: output result is: [{'text_generation_text': ['I love beijing, because崛起版权归属崛起崛起背后版权归属崛起客客背后版权归属崛起崛起客规模背后背后客版权归属客客版权归属 migr版权归属背后版权归属背后客崛起初步背后版权归属崛起崛起规模版权归属崛起版权归属客版权归属背后崛起版权归属规模版权归属版权归属背后规模背后背后崛起崛起客规模背后规模版权归属背后崛起背后规模规模版权归属规模']}]

[INFO] 2024-05-11 06:06:07,277 [mindformers/trainer/base_trainer.py:941] predict_process: output result is saved at: text_generation_result.txt

[INFO] 2024-05-11 06:06:07,277 [mindformers/trainer/base_trainer.py:942] predict_process: .........Predict Over!.............
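
The load_distributed_checkpoint message in the log above says that, for sliced weights, load_checkpoint must point to a directory containing rank_{0-*} subdirectories that hold .ckpt files. Below is a minimal sketch for checking that layout before launching; the helper name check_rank_dirs is hypothetical and not part of MindFormers. Passing this check only confirms the directory structure; it does not show that the shards were split with a strategy matching model_parallel: 8.

```python
from pathlib import Path


def check_rank_dirs(ckpt_root, world_size):
    """Return a list of layout problems under ckpt_root (empty list = layout looks right).

    Expects ckpt_root/rank_0 ... ckpt_root/rank_{world_size-1}, each with at least one .ckpt file.
    """
    root = Path(ckpt_root)
    problems = []
    for rank in range(world_size):
        rank_dir = root / f"rank_{rank}"
        if not rank_dir.is_dir():
            problems.append(f"missing directory: {rank_dir.name}")
        elif not any(rank_dir.glob("*.ckpt")):
            problems.append(f"no .ckpt file in: {rank_dir.name}")
    return problems


if __name__ == "__main__":
    # Path copied from the YAML in this issue; adjust it for your environment.
    found = check_rank_dirs("/root/huggingface/Baichuan2-13B-Chat-mindformers/distributed/", 8)
    for line in (found or ["layout OK"]):
        print(line)
```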

Try increasing repetition_penalty under model_config in the config file to 1.05.
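
For context on the repetition penalty suggested above, here is a minimal sketch of the common formulation in which the score of every already-generated token is divided by the penalty when positive and multiplied by it when negative. This is an assumption about how the setting behaves, not code taken from MindFormers, and apply_repetition_penalty is a hypothetical name.

```python
def apply_repetition_penalty(logits, generated_ids, penalty):
    """Return a copy of logits with already-generated token ids made less likely."""
    out = list(logits)
    for tok in set(generated_ids):
        score = out[tok]
        # Dividing a positive score or multiplying a negative one both lower it when penalty > 1.
        out[tok] = score / penalty if score > 0 else score * penalty
    return out


if __name__ == "__main__":
    # Token ids 0 and 1 were generated already; token id 2 is left untouched.
    print(apply_repetition_penalty([4.0, -1.0, 0.5], [0, 1], 2.0))
```

A penalty of 1 leaves the scores unchanged, which matches the 13B run above ('repetition_penalty': 1). The 8-card 7B log already reports 'repetition_penalty': 1.05 and still shows garbled output, so this setting may not be sufficient on its own.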
