| name | about | labels |
|---|---|---|
| Bug Report | Use this template for reporting a bug | kind/bug |

Qwen 7B model: inference fails after fine-tuning with bfloat16

Model repository: https://gitee.com/mindspore/mindformers/blob/dev/research/qwen/qwen.md

Hardware Environment (Ascend/GPU/CPU): Please delete the backend not involved:

/device ascend

Software Environment (Mandatory):

-- MindSpore version (e.g., 1.7.0.Bxxx) :

-- Python version (e.g., Python 3.7.5) :

-- OS platform and distribution (e.g., Linux Ubuntu 16.04):

-- GCC/Compiler version (if compiled from source):

master_20240417142516_74e1f3ea8 Milan_C17/20240414

Execute Mode (Mandatory) (PyNative/Graph):

Please delete the mode not involved:

/mode graph

Test case repository: MindFormers_Test/cases/qwen/7b/train/

Test case:

test_mf_qwen_7b_infer_batch_incremental_1p_0001.py

Network inference should succeed.

Traceback (most recent call last):

File "generate_infer.py", line 38, in <module>

outputs = yi_model.generate(inputs, generation_config=generation_config)

File "/home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/generation/text_generator.py", line 815, in generate

**model_kwargs)

File "/home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/generation/text_generator.py", line 915, in infer

**model_kwargs)

File "/home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/generation/text_generator.py", line 1010, in forward

res = self(**model_inputs) # pylint: disable=E1102

File "/home/miniconda3/envs/ci/lib/python3.7/site-packages/mindspore/nn/cell.py", line 680, in __call__

out = self.compile_and_run(*args, **kwargs)

File "/home/miniconda3/envs/ci/lib/python3.7/site-packages/mindspore/nn/cell.py", line 999, in compile_and_run

self.compile(*args, **kwargs)

File "/home/miniconda3/envs/ci/lib/python3.7/site-packages/mindspore/nn/cell.py", line 983, in compile

jit_config_dict=self._jit_config_dict, **kwargs)

File "/home/miniconda3/envs/ci/lib/python3.7/site-packages/mindspore/common/api.py", line 1590, in compile

result = self._graph_executor.compile(obj, args, kwargs, phase, self._use_vm_mode())

TypeError: For primitive[ApplyRotaryPosEmb], the input type must be same.

name:[cos]:Tensor[Float32].

name:[key]:Tensor[BFloat16].

name:[query]:Tensor[BFloat16].

name:[sin]:Tensor[Float32].

----------------------------------------------------

- C++ Call Stack: (For framework developers)

----------------------------------------------------

mindspore/core/utils/check_convert_utils.cc:1114 CheckTypeSame

----------------------------------------------------

- The Traceback of Net Construct Code:

----------------------------------------------------

# 0 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:379

if self.use_past:

# 1 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:380

if not isinstance(batch_valid_length, Tensor):

# 2 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:382

if self.training:

# 3 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:385

tokens = input_ids

^

# 4 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:388

if not self.is_first_iteration:

# 5 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:392

if pre_gather:

# 6 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:390

output = self.model(tokens, batch_valid_length, batch_index, zactivate_len, block_tables, slot_mapping)

^

# 7 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:249

if self.use_past:

# 8 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:250

freqs_cis = (self.freqs_mgr.freqs_cos, self.freqs_mgr.freqs_sin, self.freqs_mgr.swap_mask)

^

# 9 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:390

output = self.model(tokens, batch_valid_length, batch_index, zactivate_len, block_tables, slot_mapping)

^

# 10 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:259

for i in range(self.num_layers):

# 11 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:260

h = self.layers[i](h, freqs_cis, mask, batch_valid_length=batch_valid_length, block_tables=block_tables,

^

# 12 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama_transformer.py:510

if not self.use_past:

# 13 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama.py:260

h = self.layers[i](h, freqs_cis, mask, batch_valid_length=batch_valid_length, block_tables=block_tables,

^

# 14 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama_transformer.py:517

h = self.attention(input_x, freqs_cis, mask, batch_valid_length, block_tables, slot_mapping)

^

# 15 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama_transformer.py:249

if self.qkv_concat:

# 16 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama_transformer.py:259

query = self.cast(self.wq(x), self.dtype) # dp, 1 -> dp, mp

^

# 17 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama_transformer.py:264

if self.use_past:

# 18 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama_transformer.py:265

freqs_cos, freqs_sin, _ = freqs_cis

# 19 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/models/llama/llama_transformer.py:266

context_layer = self.infer_attention(query, key, value, batch_valid_length, block_tables, slot_mapping,

^

# 20 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:286

if self.is_first_iteration:

# 21 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:287

position_ids = self.context_position_ids

^

# 22 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:290

if self.use_rope_rotary_emb:

# 23 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:291

query, key = self.apply_rotary_pos_emb(query, key, freqs_cos, freqs_sin, position_ids)

^

# 24 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:295

if self.is_first_iteration:

# 25 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:296

if self.input_layout == "BSH":

^

# 26 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:297

context_layer = self.flash_attention(query, key, value, attn_mask, alibi_mask)

^

# 27 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:296

if self.input_layout == "BSH":

^

# 28 In file /home/miniconda3/envs/ci/lib/python3.7/site-packages/mindformers/modules/infer_attention.py:291

query, key = self.apply_rotary_pos_emb(query, key, freqs_cos, freqs_sin, position_ids)
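The TypeError above reports that `ApplyRotaryPosEmb` received `cos` and `sin` as Float32 while `query` and `key` were BFloat16. As a rough illustration of what a same-type check of this kind does, here is a minimal pure-Python sketch. `check_type_same` and the string dtype names are hypothetical stand-ins for illustration only; this is not MindSpore's actual implementation.

```python
from collections import defaultdict


def check_type_same(prim_name, input_dtypes):
    """Raise TypeError unless every named input shares one dtype.

    Hypothetical stand-in for the framework check; dtypes are plain strings.
    """
    groups = defaultdict(list)
    for name, dtype in input_dtypes.items():
        groups[dtype].append(name)
    if len(groups) > 1:
        details = " ".join(
            f"name:[{name}]:Tensor[{dtype}]."
            for name, dtype in sorted(input_dtypes.items())
        )
        raise TypeError(
            f"For primitive[{prim_name}], the input type must be same. {details}"
        )
    return next(iter(groups))


# Dtypes copied from the error message in this report.
reported = {"cos": "Float32", "key": "BFloat16", "query": "BFloat16", "sin": "Float32"}

try:
    check_type_same("ApplyRotaryPosEmb", reported)
except TypeError as err:
    mismatch_message = str(err)

# With all four inputs in one dtype, the check passes.
aligned = {name: "BFloat16" for name in reported}
common_dtype = check_type_same("ApplyRotaryPosEmb", aligned)
```

The sketch only shows the shape of the check. According to the follow-up in this report, the resolution was a change to the inference script.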

Assigned to 杨贵龙



The script attached to the self-test document has a bug.

Corrected the script attached to the self-test document.

--- run_generate.py 2024-04-15 19:22:11.000000000 +0800

+++ fix/run_generate.py 2024-04-22 21:41:55.000000000 +0800

@@ -42,13 +42,17 @@

config_file_path = args.config

config = MindFormerConfig(config_file_path)

+ from mindformers.tools.check_rules import check_rules

+ check_rules(config, mode='predict')

+

config.context.device_id = args.device_id

build_context(config)

build_parallel_config(config)

tokenizer = QwenTokenizer(**config.processor.tokenizer)

- model_config = QwenConfig.from_pretrained(config_file_path)

- model_config.checkpoint_name_or_path = args.load_checkpoint

+ model_config = QwenConfig(**config.model.model_config)

+ if args.load_checkpoint:

+ model_config.checkpoint_name_or_path = args.load_checkpoint

model = QwenForCausalLM(model_config)

if config.model.model_config.pet_config:

The self-test document has been updated (and uploaded to the self-test document archive page).
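The diff above changes two things: the model config is built from the already-parsed `config.model.model_config` section, and the checkpoint path is overridden only when `load_checkpoint` is actually supplied. The following is a minimal sketch of that pattern using plain-Python stand-ins. `ModelConfig`, `build_model_config`, and the sample values are hypothetical and do not use the MindFormers API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModelConfig:
    """Hypothetical stand-in for a model config object."""
    compute_dtype: str = "float16"
    checkpoint_name_or_path: Optional[str] = None


def build_model_config(model_section: dict, load_checkpoint: Optional[str]) -> ModelConfig:
    """Build the config from the parsed YAML section, then apply the CLI override."""
    model_config = ModelConfig(**model_section)
    # Only override when a checkpoint was passed; otherwise keep the YAML value.
    if load_checkpoint:
        model_config.checkpoint_name_or_path = load_checkpoint
    return model_config


# Sample values invented for this sketch.
yaml_section = {"compute_dtype": "bfloat16", "checkpoint_name_or_path": "from_yaml.ckpt"}

kept = build_model_config(yaml_section, load_checkpoint=None)
overridden = build_model_config(yaml_section, load_checkpoint="cli.ckpt")
```

Guarding the override this way means an omitted command-line argument no longer replaces the checkpoint path from the YAML file with an empty value.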

None.

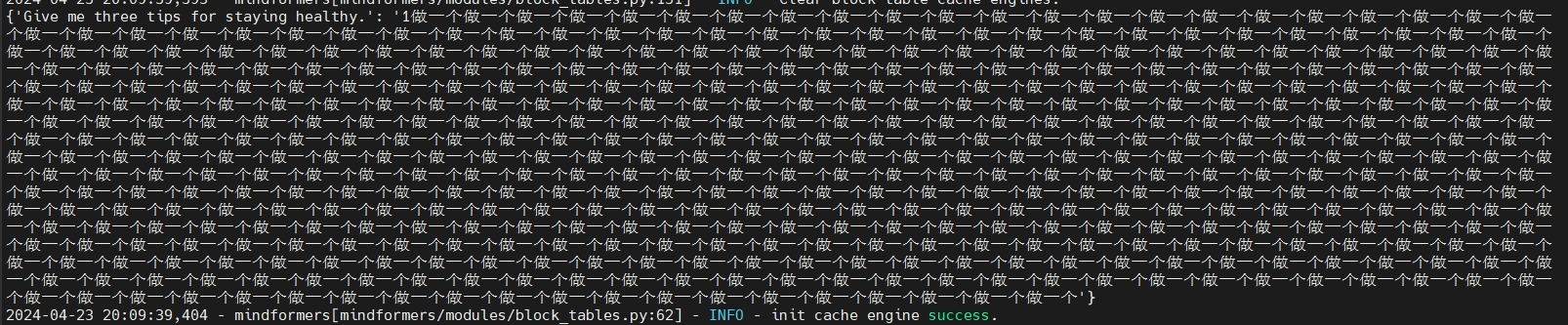

2024-04-22 17:34:16,773 - mindformers[mindformers/models/modeling_utils.py:1435] - INFO - weights in ./run/qwen_1.8b_base.ckpt are loaded

Please enter your predict data (type '/exit' to quit):

> Write a quicksort in Python

2024-04-22 17:34:22,430 - mindformers[mindformers/modules/block_tables.py:62] - INFO - init cache engine success.

2024-04-22 17:34:22,431 - mindformers[mindformers/generation/text_generator.py:678] - WARNING - When do_sample is set to False, top_k will be set to 1 and top_p will be set to 0, making them inactive.

2024-04-22 17:34:22,431 - mindformers[mindformers/generation/text_generator.py:682] - INFO - Generation Config is: {'max_length': 512, 'max_new_tokens': None, 'min_length': 0, 'min_new_tokens': None, 'num_beams': 1, 'do_sample': False, 'use_past': True, 'temperature': 1.0, 'top_k': 0, 'top_p': 1.0, 'repetition_penalty': 1, 'encoder_repetition_penalty': 1.0, 'renormalize_logits': False, 'pad_token_id': 151643, 'bos_token_id': 1, 'eos_token_id': 151643, '_from_model_config': True}

2024-04-22 17:34:22,432 - mindformers[mindformers/generation/text_generator.py:233] - INFO - The generation mode will be **GREEDY_SEARCH**.

2024-04-22 17:34:41,769 - mindformers[mindformers/generation/text_generator.py:854] - INFO - total time: 19.336963176727295 s; generated tokens: 166 tokens; generate speed: 8.584595134348021 tokens/s

2024-04-22 17:34:41,772 - mindformers[mindformers/modules/block_tables.py:131] - INFO - Clear block table cache engines.

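Write a quicksort in Python

The following is a quicksort implementation in Python:

```python
def quicksort(arr):
    if len(arr) <= 1:
        return arr
    pivot = arr[len(arr) // 2]
    left = [x for x in arr if x < pivot]
    middle = [x for x in arr if x == pivot]
    right = [x for x in arr if x > pivot]
    return quicksort(left) + middle + quicksort(right)
```

The time complexity of this algorithm is O(nlogn), where n is the number of elements to sort. It is implemented recursively: each call picks a middle element as the pivot, places the elements smaller than the pivot on the left and the larger ones on the right, and then recursively sorts the left and right subarrays.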

Please enter your predict data (type '/exit' to quit):

# Self-test Report & DT Review

Need to add ST/UT: No

Reason:


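Test case file:

Test case name:

Regression version:

master_20240423061516_781a38ddd6 Milan_C17/20240414

Regression steps: see the steps in this issue

Test conclusion: regression passed and inference runs normally. The incorrect inference result is tracked in a separate issue.

Regression tester: 白梦真

Regression date: 2024.04.23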
