| name | about | labels |
|---|---|---|
| Bug Report | Use this template for reporting a bug | kind/bug |

After fine-tuning llama2_13b_lora in the test environment, inference with the fine-tuned network fails.

Model repository: https://gitee.com/mindspore/mindformers/blob/dev/configs/llama2/lora_llama2_13b.yaml

Hardware environment (Ascend/GPU/CPU):

/device ascend/

CANN version: Milan_C18/20240517/

MindSpore version: master_B521

MindFormers version: master_B521

Execution mode (PyNative/Graph):

/mode pynative

/mode graph

Test case repository: MindFormers_Test/cases/llama2/13b/train/

Test case:

test_mf_llama2_13b_lora_train_alpaca_8p_0001

Expected result: network inference succeeds.

2024-05-19 15:21:09,478 - mindformers[mindformers/generation/text_generator.py:252] - INFO - The generation mode will be **GREEDY_SEARCH**.

2024-05-19 15:21:09,478 - mindformers[mindformers/generation/text_generator.py:93] - INFO - Set kbk infer :False

[CRITICAL] ANALYZER(2776580,ffff816e6020,python):2024-05-19-15:21:16.615.136 [mindspore/ccsrc/pipeline/jit/ps/static_analysis/prim.cc:1263] CheckArgsSizeAndType] For Operator[ReshapeAndCache], slot_mapping's type 'None' does not match expected type 'Tensor'.

The reason may be: lack of definition of type cast, or incorrect type when creating the node.

This exception is caused by framework's unexpected error. Please create an issue at https://gitee.com/mindspore/mindspore/issues to get help.

2024-05-19 15:21:21,535 - mindformers[mindformers/tools/cloud_adapter/cloud_monitor.py:43] - ERROR - Traceback (most recent call last):

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/tools/cloud_adapter/cloud_monitor.py", line 34, in wrapper

result = run_func(*args, **kwargs)

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/run_mindformer.py", line 43, in main

trainer.predict(predict_checkpoint=config.load_checkpoint, input_data=config.input_data,

File "/home/miniconda3/envs/large_model_39/lib/python3.9/site-packages/mindspore/_checkparam.py", line 1372, in wrapper

return func(*args, **kwargs)

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/trainer/trainer.py", line 692, in predict

output_result = self.trainer.predict(

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/trainer/causal_language_modeling/causal_language_modeling.py", line 343, in predict

return self.predict_process(config=config,

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/trainer/base_trainer.py", line 937, in predict_process

output_results = self.pipeline_task(input_data, top_k=top_k)

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pipeline/base_pipeline.py", line 149, in __call__

outputs = self.run_multi(inputs, batch_size, preprocess_params, forward_params, postprocess_params)

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pipeline/text_generation_pipeline.py", line 181, in run_multi

outputs.extend(self.run_single(item, preprocess_params,

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pipeline/base_pipeline.py", line 237, in run_single

model_outputs = self.forward(model_inputs, **forward_params)

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pipeline/base_pipeline.py", line 303, in forward

return self._forward(model_inputs, **forward_params)

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pipeline/text_generation_pipeline.py", line 197, in _forward

output_ids = self.network.generate(input_ids, **forward_params)

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/generation/text_generator.py", line 830, in generate

target_list, is_finished = self.infer(input_ids=input_ids,

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/generation/text_generator.py", line 952, in infer

res, current_index = self.forward(input_ids=input_ids,

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/generation/text_generator.py", line 1056, in forward

res = self._incremental_infer(

File "/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/generation/text_generator.py", line 306, in _incremental_infer

res = self(

File "/home/miniconda3/envs/large_model_39/lib/python3.9/site-packages/mindspore/nn/cell.py", line 696, in __call__

out = self.compile_and_run(*args, **kwargs)

File "/home/miniconda3/envs/large_model_39/lib/python3.9/site-packages/mindspore/nn/cell.py", line 1014, in compile_and_run

self.compile(*args, **kwargs)

File "/home/miniconda3/envs/large_model_39/lib/python3.9/site-packages/mindspore/nn/cell.py", line 997, in compile

_cell_graph_executor.compile(self, *self._compile_args, phase=self.phase,

File "/home/miniconda3/envs/large_model_39/lib/python3.9/site-packages/mindspore/common/api.py", line 1643, in compile

result = self._graph_executor.compile(obj, args, kwargs, phase, self._use_vm_mode())

TypeError: For Operator[ReshapeAndCache], slot_mapping's type 'None' does not match expected type 'Tensor'.

The reason may be: lack of definition of type cast, or incorrect type when creating the node.

----------------------------------------------------

- Framework Unexpected Exception Raised:

----------------------------------------------------

This exception is caused by framework's unexpected error. Please create an issue at https://gitee.com/mindspore/mindspore/issues to get help.

- C++ Call Stack: (For framework developers)

----------------------------------------------------

mindspore/ccsrc/pipeline/jit/ps/static_analysis/prim.cc:1263 CheckArgsSizeAndType

----------------------------------------------------

- The Traceback of Net Construct Code:

----------------------------------------------------

# 0 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pet/pet_model.py:79

return self.pet_model(input_ids, labels, input_position, position_ids, attention_mask, input_embeds,

# 1 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pet/models/lora.py:72

return self.lora_model(input_ids=input_ids,

# 2 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:357

if self.use_past:

# 3 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pet/models/lora.py:72

return self.lora_model(input_ids=input_ids,

^

# 4 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:358

if not isinstance(batch_valid_length, Tensor):

# 5 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:360

if self.training:

# 6 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:363

tokens = input_ids

^

# 7 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/pet/models/lora.py:72

return self.lora_model(input_ids=input_ids,

^

# 8 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:366

if not self.is_first_iteration:

# 9 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:370

if pre_gather:

# 10 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:368

output = self.model(tokens, batch_valid_length, batch_index, zactivate_len, block_tables, slot_mapping)

^

# 11 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:193

if self.use_past:

# 12 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:194

if self.is_first_iteration:

# 13 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:195

freqs_cis = self.freqs_mgr.prefill(bs, seq_len)

^

# 14 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:194

if self.is_first_iteration:

# 15 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:368

output = self.model(tokens, batch_valid_length, batch_index, zactivate_len, block_tables, slot_mapping)

^

# 16 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:206

for i in range(self.num_layers):

# 17 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:207

h = self.layers[i](h, freqs_cis, mask, batch_valid_length=batch_valid_length, block_tables=block_tables,

^

# 18 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama_transformer.py:501

if not self.use_past:

# 19 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama.py:207

h = self.layers[i](h, freqs_cis, mask, batch_valid_length=batch_valid_length, block_tables=block_tables,

^

# 20 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama_transformer.py:508

h = self.attention(input_x, freqs_cis, mask, batch_valid_length, block_tables, slot_mapping)

^

# 21 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama_transformer.py:248

if self.qkv_concat:

# 22 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama_transformer.py:258

query = self.cast(self.wq(x), self.dtype) # dp, 1 -> dp, mp

^

# 23 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama_transformer.py:263

if self.use_past:

# 24 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama_transformer.py:264

freqs_cos, freqs_sin, _ = freqs_cis

# 25 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/models/llama/llama_transformer.py:265

context_layer = self.infer_attention(query, key, value, batch_valid_length, block_tables, slot_mapping,

^

# 26 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/infer_attention.py:281

if self.use_rope_rotary_emb:

# 27 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/infer_attention.py:283

freqs_cos = self.cast(freqs_cos, mstype.float16)

# 28 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/infer_attention.py:289

if self.is_first_iteration:

# 29 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/infer_attention.py:290

if self.input_layout == "BSH":

^

# 30 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/infer_attention.py:291

context_layer = self.flash_attention(query, key, value, attn_mask, alibi_mask)

^

# 31 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/infer_attention.py:290

if self.input_layout == "BSH":

^

# 32 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/infer_attention.py:286

key_out = self.paged_attention_mgr(key, value, slot_mapping)

^

# 33 In file /data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/mindformers/modules/paged_attention_mgr.py:61

return self.reshape_and_cache(key, value, self.key_cache, self.value_cache, slot_mapping)

^

(See file '/data/jenkins_workspace/TDT_deployment/MindFormers_Test/cases/llama2/13b/train/test_mf_llama2_13b_lora_train_alpaca_8p_0001/rank_0/om/analyze_fail.ir' for more details. Get instructions about `analyze_fail.ir` at https://www.mindspore.cn/search?inputValue=analyze_fail.ir)
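When triaging a long log like the one above, it can help to pull out just the operator, argument, and types from the type-mismatch line. The following is a minimal sketch, assuming the message wording stays as shown in this log; `parse_type_mismatch` is a hypothetical helper and not part of MindSpore or MindFormers.

```python
import re

# Pattern for the argument type-mismatch message seen in the log above.
# Assumption: the wording of the message matches what this issue shows.
TYPE_MISMATCH = re.compile(
    r"For Operator\[(?P<op>\w+)\], (?P<arg>\w+)'s type '(?P<actual>[^']+)' "
    r"does not match expected type '(?P<expected>[^']+)'"
)


def parse_type_mismatch(line):
    """Return operator, argument, actual and expected type, or None if no match."""
    match = TYPE_MISMATCH.search(line)
    return match.groupdict() if match else None


# The TypeError line copied from the traceback in this issue.
log_line = (
    "TypeError: For Operator[ReshapeAndCache], slot_mapping's type 'None' "
    "does not match expected type 'Tensor'."
)
info = parse_type_mismatch(log_line)
print(info)
```

For this log the extracted fields point at `slot_mapping` reaching `ReshapeAndCache` as `None`, which matches the last frame of the net construct traceback (`paged_attention_mgr.py:61`).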

Routing this to 倪钰鑫.

Please assign a maintainer to check this issue.

@zhangjie18

感谢您的提问,您可以评论//mindspore-assistant更快获取帮助:

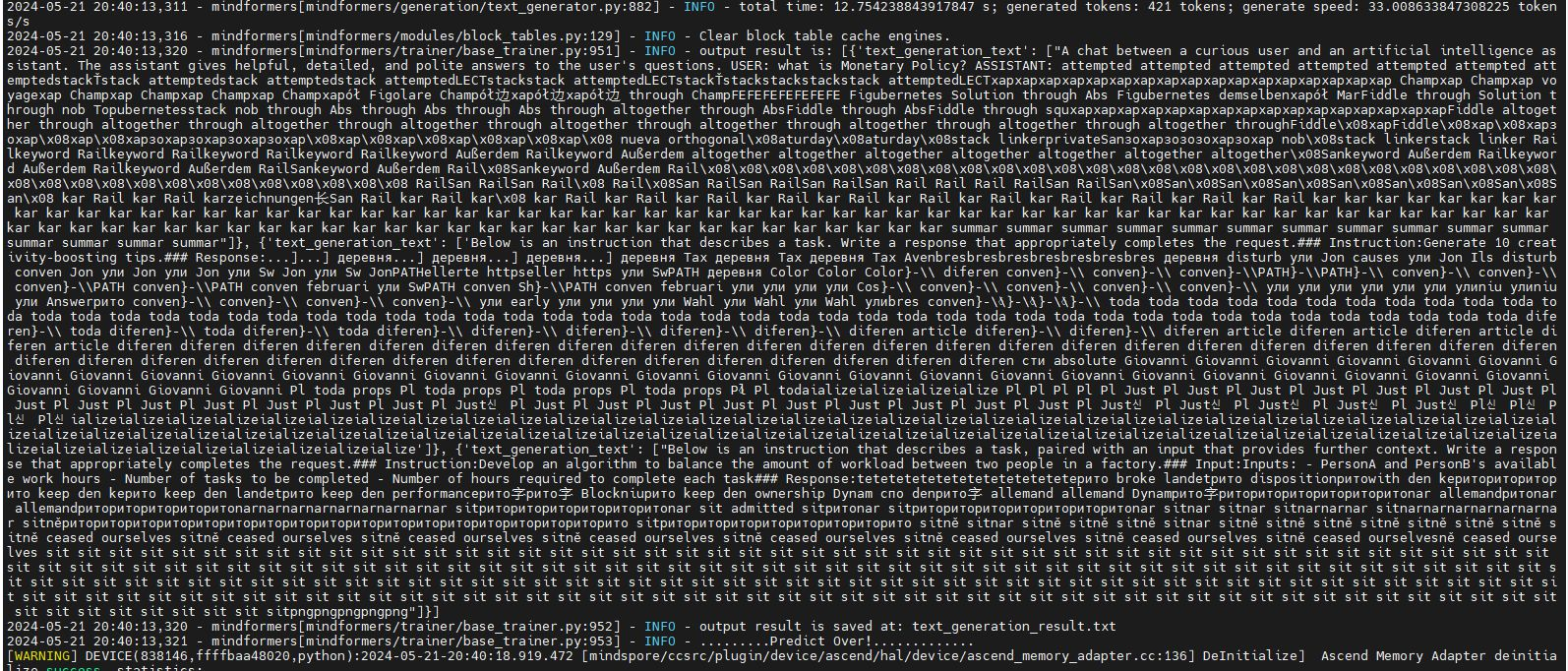

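Root cause: LoRA inference did not go through the kbk flow (consistent with "Set kbk infer :False" in the log above).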
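For context on the argument named in the error: in paged KV-cache schemes in general, a slot mapping tells the cache-write step where each token's key and value should be stored. The sketch below shows one common convention (flat slot = block id × block size + offset within the block). It is an assumption that this layout resembles what `ReshapeAndCache` expects; `build_slot_mapping` and its parameters are hypothetical names, and this is not the change made in the fix.

```python
def build_slot_mapping(block_table, seq_len, block_size):
    """Map each token position of one sequence to a flat KV-cache slot.

    block_table: physical block ids assigned to this sequence, in order.
    Hypothetical helper for illustration; not MindFormers code.
    """
    needed_blocks = (seq_len + block_size - 1) // block_size
    if needed_blocks > len(block_table):
        raise ValueError("block_table is too short for seq_len")
    slots = []
    for pos in range(seq_len):
        # Which physical block holds this position, and where inside it.
        block_id = block_table[pos // block_size]
        slots.append(block_id * block_size + pos % block_size)
    return slots


# Six tokens, blocks of four slots, sequence assigned physical blocks 3 and 0.
slot_mapping = build_slot_mapping(block_table=[3, 0], seq_len=6, block_size=4)
print(slot_mapping)  # [12, 13, 14, 15, 0, 1]
```

If the inference path never builds such a mapping, the argument stays at its default of `None`, which is the situation the type check in the log rejects.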

Fixed; see PR https://gitee.com/mindspore/mindformers/pulls/3113

Regression versions:

CANN version: Milan_C18/20240517/

MindSpore version: MindSpore_master_659b2536 (MindSporeDaily)

MindFormers version: dev_20240521121521_2df1290c073e4

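Regression steps: follow the steps in this issue.

Basic issue: the functional problem is resolved.

Note: the inference output is garbled because inference was run without loading weights; this run only verifies whether the functional problem is resolved.

Test conclusion: regression passed.

Regression date: 2024.5.22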
