test_ops_add_layernorm.py::test_add_layer_norm failed in gate

Hardware Environment (Ascend/GPU/CPU): Please delete the backend not involved:

/device ascend910B

Software Environment (Mandatory):

-- MindSpore version (e.g., 1.7.0.Bxxx) :

-- Python version (e.g., Python 3.7.5) :

-- OS platform and distribution (e.g., Linux Ubuntu 16.04):

-- GCC/Compiler version (if compiled from source):

Execute Mode (Mandatory) (PyNative/Graph):

Please delete the mode not involved:

/mode pynative

/mode graph

[gate failed]tests/st/ops

test_ops_add_layernorm.py::test_add_layer_norm

[2024-06-11T10:11:21.505Z] tensor_type = mindspore.bfloat16

[2024-06-11T10:11:21.505Z]

[2024-06-11T10:11:21.505Z] @pytest.mark.level0

[2024-06-11T10:11:21.505Z] @pytest.mark.env_onecard

[2024-06-11T10:11:21.505Z] @pytest.mark.platform_arm_ascend910b_training

[2024-06-11T10:11:21.505Z] @pytest.mark.platform_x86_ascend910b_training

[2024-06-11T10:11:21.505Z] @pytest.mark.parametrize('tensor_type', [mstype.float32, mstype.float16, mstype.bfloat16])

[2024-06-11T10:11:21.505Z] def test_add_layer_norm(tensor_type):

[2024-06-11T10:11:21.505Z] """

[2024-06-11T10:11:21.505Z] Feature: test add_layernorm fusion in kbk mode

[2024-06-11T10:11:21.505Z] Description: test add_layernorm.

[2024-06-11T10:11:21.505Z] Expectation: the result is the same with aclnn version of two ops

[2024-06-11T10:11:21.505Z] """

[2024-06-11T10:11:21.505Z] os.environ["MS_DISABLE_INTERNAL_KERNELS_LIST"] = "AddLayerNorm"

[2024-06-11T10:11:21.505Z] context.set_context(mode=0)

[2024-06-11T10:11:21.505Z]

[2024-06-11T10:11:21.505Z] x1 = generate_random_input((2, 3), np.float32)

[2024-06-11T10:11:21.505Z] x2 = generate_random_input((2, 3), np.float32)

[2024-06-11T10:11:21.505Z] gamma = np.ones([3]).astype(np.float32)

[2024-06-11T10:11:21.505Z] beta = np.zeros([3]).astype(np.float32)

[2024-06-11T10:11:21.505Z] x1_tensor = Tensor(x1, dtype=tensor_type)

[2024-06-11T10:11:21.505Z] x2_tensor = Tensor(x2, dtype=tensor_type)

[2024-06-11T10:11:21.505Z] gamma_tensor = Tensor(gamma, dtype=tensor_type)

[2024-06-11T10:11:21.505Z] beta_tensor = Tensor(beta, dtype=tensor_type)

[2024-06-11T10:11:21.505Z]

[2024-06-11T10:11:21.505Z] net = Add_LayerNorm()

[2024-06-11T10:11:21.506Z] net.set_jit_config(JitConfig(jit_level="O0", infer_boost="on"))

[2024-06-11T10:11:21.506Z] output = net(x1_tensor, x2_tensor, gamma_tensor, beta_tensor)

[2024-06-11T10:11:21.506Z]

[2024-06-11T10:11:21.506Z] expect = generate_expect_forward_output(x1, x2, gamma, beta)

[2024-06-11T10:11:21.506Z] assert np.allclose(output[0].float().asnumpy(), expect[0], rtol=5e-3, atol=5e-3)

[2024-06-11T10:11:21.506Z] assert np.allclose(output[1].float().asnumpy(), expect[1], rtol=5e-3, atol=5e-3)

[2024-06-11T10:11:21.506Z] > assert np.allclose(output[2].float().asnumpy(), expect[2], rtol=5e-3, atol=5e-3)

[2024-06-11T10:11:21.506Z] E assert False

[2024-06-11T10:11:21.506Z] E + where False = <function allclose at 0xfffed6cc7710>(array([[5.3118715 ],\n [0.91416574]], dtype=float32), array([[5.2235823],\n [0.9144495]], dtype=float32), rtol=0.005, atol=0.005)

[2024-06-11T10:11:21.506Z] E + where <function allclose at 0xfffed6cc7710> = np.allclose

[2024-06-11T10:11:21.506Z] E + and array([[5.3118715 ],\n [0.91416574]], dtype=float32) = <bound method Tensor.asnumpy of Tensor(shape=[2, 1], dtype=Float32, value=\n[[ 5.31187153e+00],\n [ 9.14165735e-01]])>()

[2024-06-11T10:11:21.506Z] E + where <bound method Tensor.asnumpy of Tensor(shape=[2, 1], dtype=Float32, value=\n[[ 5.31187153e+00],\n [ 9.14165735e-01]])> = Tensor(shape=[2, 1], dtype=Float32, value=\n[[ 5.31187153e+00],\n [ 9.14165735e-01]]).asnumpy

[2024-06-11T10:11:21.506Z] E + where Tensor(shape=[2, 1], dtype=Float32, value=\n[[ 5.31187153e+00],\n [ 9.14165735e-01]]) = <bound method Tensor.float of Tensor(shape=[2, 1], dtype=Float32, value=\n[[ 5.31187153e+00],\n [ 9.14165735e-01]])>()

[2024-06-11T10:11:21.506Z] E + where <bound method Tensor.float of Tensor(shape=[2, 1], dtype=Float32, value=\n[[ 5.31187153e+00],\n [ 9.14165735e-01]])> = Tensor(shape=[2, 1], dtype=Float32, value=\n[[ 5.31187153e+00],\n [ 9.14165735e-01]]).float

[2024-06-11T10:11:21.506Z]

[2024-06-11T10:11:21.506Z] test_ops_add_layernorm.py:81: AssertionError

[2024-06-11T10:11:21.506Z] =============================== warnings summary ===============================


Thank you for your question. You can comment //mindspore-assistant to get help faster:

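Root cause: the fused operator computes its result slightly differently from the NumPy reference functions, so a small fraction of the randomly generated inputs produce errors that exceed the tolerance.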
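To see how far outside the tolerance the failing element was, the check can be reproduced by hand. The sketch below applies the element-wise criterion documented for `np.allclose`, `|actual - expected| <= atol + rtol * |expected|`, to the two values printed in the assertion message for `output[2]`. The helper name `within_tolerance` is hypothetical and used only for illustration.

```python
def within_tolerance(actual, expected, rtol=5e-3, atol=5e-3):
    """Element-wise criterion documented for numpy.allclose:
    |actual - expected| <= atol + rtol * |expected|.
    (Hypothetical helper, not part of the test file.)"""
    return abs(actual - expected) <= atol + rtol * abs(expected)


# Values copied from the failing assertion on output[2] in the gate log.
actual = [5.3118715, 0.91416574]
expected = [5.2235823, 0.9144495]

for a, e in zip(actual, expected):
    diff = abs(a - e)                # absolute error of this element
    bound = 5e-3 + 5e-3 * abs(e)     # allowed error for this element
    print(f"diff={diff:.6f} bound={bound:.6f} ok={within_tolerance(a, e)}")
```

The first element differs by roughly 0.088 while only about 0.031 is allowed, so it is the one that fails; the second element differs by roughly 0.0003 and is well inside its bound of about 0.0096.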

Solution: fix the inputs and the expected outputs, using inputs that do not produce random errors.

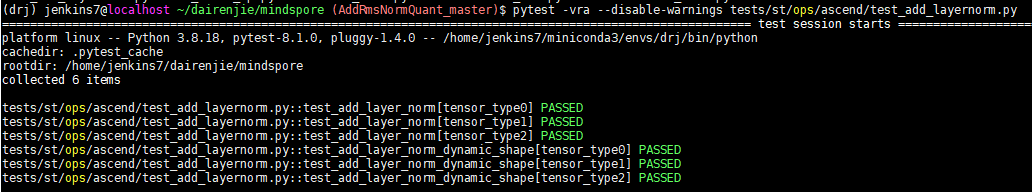
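The actual change is only shown in the screenshot, so the following is a minimal sketch of what fixed inputs could look like, not the author's patch. It assumes a NumPy reference that adds the two inputs and then normalizes over the last axis, and it assumes the third returned value is the reciprocal standard deviation; the function name `add_layer_norm_reference`, the `eps` value, and the input values are all hypothetical. The inputs are small integers and halves, which are exactly representable in float16 and bfloat16, so casting them to the parametrized dtype introduces no rounding.

```python
import numpy as np


def add_layer_norm_reference(x1, x2, gamma, beta, eps=1e-5):
    """Hypothetical NumPy reference: add, then layer-normalize the last axis.
    eps is an assumed value, not taken from the test file."""
    x = x1 + x2
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    rstd = 1.0 / np.sqrt(var + eps)          # reciprocal standard deviation
    y = (x - mean) * rstd * gamma + beta
    return y, mean, rstd


# Fixed, hand-picked inputs instead of generate_random_input(...).
# Each row of x1 + x2 is well spread out, so the variance is not close to zero.
x1 = np.array([[1.0, 2.0, 3.0], [4.0, 6.0, 8.0]], dtype=np.float32)
x2 = np.array([[0.5, 1.5, 2.5], [-1.0, 0.0, 1.0]], dtype=np.float32)
gamma = np.ones([3], dtype=np.float32)
beta = np.zeros([3], dtype=np.float32)

y, mean, rstd = add_layer_norm_reference(x1, x2, gamma, beta)
print(mean.ravel(), rstd.ravel())
```

With deterministic inputs like these, the expected arrays can be computed once and hard-coded in the test, so a gate run no longer depends on which random values happen to be drawn.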

Result of the change:

The test case test_ops_add_layernorm.py::test_add_layer_norm under st/ops on br_base does not exist on master.

On master, test_add_layernorm.py under st/ops/ascend has been promoted to level0.

Regression passed.

戴仁杰 2024-06-18 09:17

The SE said br_base is going to be overwritten, so merging into br_base is not allowed.
